MEG matters

MIT’s new MEG lab is starting to deliver results.

Somewhere nearby, most likely, sits a coffee mug. Give it a glance. An image of that mug travels from desktop to retina and into the brain, where it is processed, categorized and recognized, within a fraction of a second.

All this feels effortless to us, but programming a computer to do the same reveals just how complex that process is. Computers can handle simple objects in expected positions, such as an upright mug. But tilt that cup on its side? “That messes up a lot of standard computer vision algorithms,” says Leyla Isik, a graduate student in Tomaso Poggio’s lab at the McGovern Institute.

For her thesis research, Isik is working to build better computer vision models, inspired by how human brains recognize objects. But to track this process, she needed an imaging tool that could keep up with the brain’s astonishing speed. In 2011, soon after Isik arrived at MIT, the McGovern Institute opened its magnetoencephalography (MEG) lab, one of only a few dozen in the entire country. MEG operates on the same timescale as the human brain. Now, with easy access to a MEG facility dedicated to brain research, neuroscientists at McGovern and across MIT, even those like Isik who had never scanned human subjects, are delving into human neural processing in ways never possible before.

The making of…

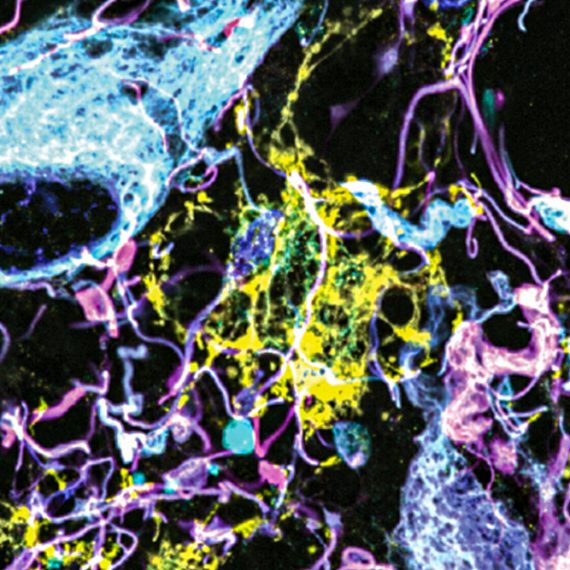

MEG was developed at MIT in the early 1970s by physicist David Cohen. He was searching for the tiny magnetic fields that were predicted to arise within electrically active tissues such as the brain. Magnetic fields can travel unimpeded through the skull, so Cohen hoped it might be possible to detect them noninvasively. Because the signals are so small, a billion times weaker than the magnetic field of the Earth, Cohen experimented with a newly invented device called a SQUID (short for superconducting quantum interference device), a highly sensitive magnetometer. In 1972, he succeeded in recording alpha waves, brain rhythms that occur when the eyes close. The recording, scratched out on yellow graph paper with notes scrawled in the margins, led to a seminal paper that launched a new field. Cohen’s prototype has now evolved into a sophisticated machine with an array of 306 SQUID detectors contained within a helmet that sits over the subject’s head like a giant hairdryer.

As MEG technology advanced, neuroscientists watched with growing interest. Animal studies were revealing the importance of high-frequency electrical oscillations such as gamma waves, which appear to have a key role in the communication between different brain regions. But apart from occasional neurosurgery patients, it was very difficult to study these signals in the human brain or to understand how they might contribute to human cognition. The most widely used imaging method, functional magnetic resonance imaging (fMRI), could provide precise spatial localization, but it could not detect events on the necessary millisecond timescale. “We needed to bridge that gap,” says Robert Desimone, director of the McGovern Institute.

Desimone decided to make MEG a priority, and with support from donors including Thomas F. Peterson, Jr., Edward and Kay Poitras, and the Simons Foundation, the institute was able to purchase a Triux scanner from Elekta, the newest model on the market and the first to be installed in North America.

One challenge was the high level of magnetic background noise from the surrounding environment, and so the new scanner was installed in a 13-ton shielded room that deflects interference away from the scanner. “We have a challenging location, but we were able to work with it and to get clear signals,” says Desimone.

“An engineer might have picked a different site, but we cannot overstate the importance of having MEG right here, next to the MRI scanners and easily accessible for our researchers.”

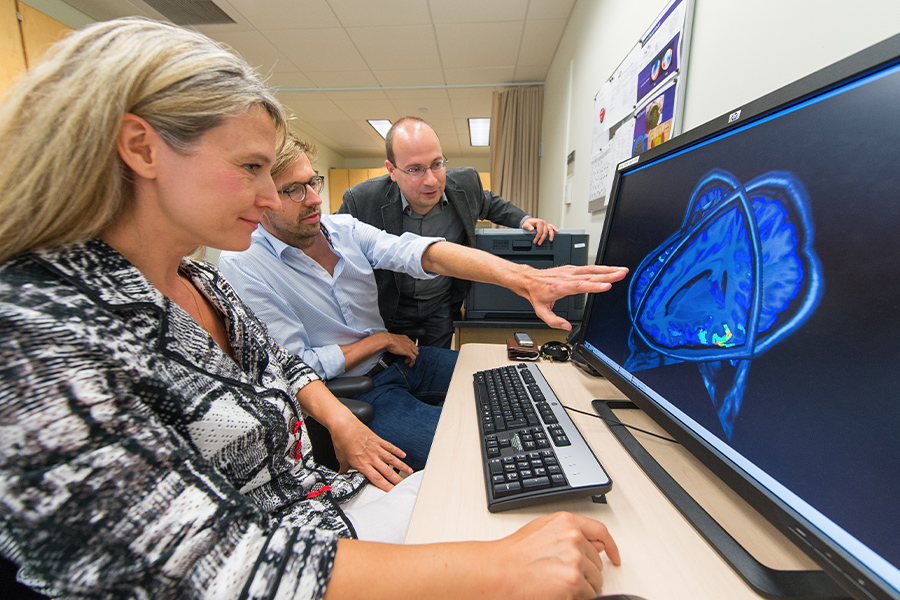

To run the new lab, Desimone recruited Dimitrios Pantazis, an expert in MEG signal processing from the University of Southern California. Pantazis knew a lot about MEG data analysis, but he had never actually scanned human subjects himself. In March 2011, he watched in anticipation as Elekta engineers uncrated the new system. Within a few months, he had the lab up and running.

Computer vision quest

When the MEG lab opened, Isik attended a training session. Like Pantazis, she had no previous experience scanning human subjects, but MEG seemed an ideal tool for teasing out the complexities of human object recognition.

She recorded the brain activity of volunteers as they viewed images of objects in various orientations. She also asked them to track the color of a cross on each image, partly to keep their eyes on the screen and partly to keep them alert. “It’s a dark and quiet room and a comfy chair,” she says. “You have to give them something to do to keep them awake.”

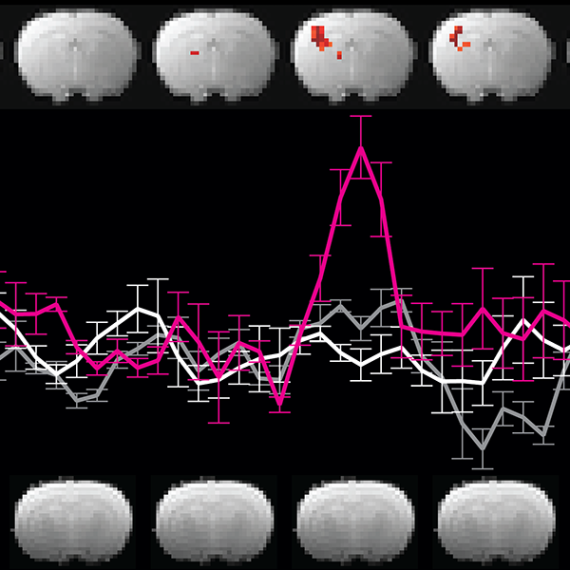

To process the data, Isik used a computational tool called a machine learning classifier, which learns to recognize patterns of brain activity evoked by different stimuli. By comparing responses to different types of objects, or similar objects from different viewpoints (such as a cup lying on its side), she was able to show that the human visual system processes objects in stages, starting with the specific view and then generalizing to features that are independent of the size and position of the object.
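The idea of decoding brain activity with a classifier, one time point at a time, can be sketched in a few lines of Python. This is a minimal illustration on synthetic data, not Isik’s actual analysis: the function names, the nearest-centroid classifier, the array sizes and the simulated “onset” are all assumptions chosen to keep the example small. It trains on some trials, tests on held-out trials, and reports how often the stimulus label is guessed correctly at each time point.

```python
import numpy as np

def simulate_trials(n_trials=80, n_sensors=30, n_times=20, onset=10, seed=0):
    """Synthetic stand-in for MEG data (hypothetical sizes): pure noise before
    `onset`, then a class-specific sensor pattern added to every trial."""
    rng = np.random.default_rng(seed)
    labels = np.repeat([0, 1], n_trials // 2)           # two stimulus classes
    data = rng.normal(size=(n_trials, n_sensors, n_times))
    patterns = rng.normal(size=(2, n_sensors))          # one pattern per class
    data[:, :, onset:] += patterns[labels][:, :, None]
    return data, labels

def decode_over_time(data, labels, n_folds=4, seed=0):
    """Cross-validated nearest-centroid decoding, run separately at each time point.
    Returns the fraction of held-out trials classified correctly per time point."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(labels)), n_folds)
    n_times = data.shape[2]
    accuracy = np.zeros(n_times)
    for t in range(n_times):
        correct = 0
        for test_idx in folds:
            train_mask = np.ones(len(labels), dtype=bool)
            train_mask[test_idx] = False
            x_train, y_train = data[train_mask, :, t], labels[train_mask]
            # "Training" is just averaging the sensor pattern of each class.
            centroids = np.stack([x_train[y_train == c].mean(axis=0) for c in (0, 1)])
            x_test = data[test_idx, :, t]
            # Assign each held-out trial to the closest class average.
            dists = np.linalg.norm(x_test[:, None, :] - centroids[None, :, :], axis=2)
            correct += np.sum(dists.argmin(axis=1) == labels[test_idx])
        accuracy[t] = correct / len(labels)
    return accuracy

data, labels = simulate_trials()
accuracy = decode_over_time(data, labels)
print(np.round(accuracy, 2))
```

In this toy setup, accuracy should hover near chance (0.5) before the simulated onset and climb once the class-specific pattern appears. Testing for invariance, as described above, would mean training on one viewpoint and testing on another; that step is not shown here.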

Isik is now working to develop a computer model that simulates this step-wise processing. “Having this data to work with helps ground my models,” she says. Meanwhile, Pantazis was impressed by the power of machine learning classifiers to make sense of the huge quantities of data produced by MEG studies. With support from the National Science Foundation, he is working to incorporate them into a software analysis package that is widely used by the MEG community.

Mixology

Because fMRI and MEG provide complementary information, it was natural that researchers would want to combine them. This is a computationally challenging task, but MIT research scientist Aude Oliva and postdoc Radoslaw Cichy, in collaboration with Pantazis, have developed a new way to do so. They presented 92 images to volunteer subjects, once in the MEG scanner, and then again in the MRI scanner across the hall. For each data set, they looked for patterns of similarity between responses to different stimuli. Then, by aligning the two ‘similarity maps,’ they could determine which MEG signals correspond to which fMRI signals, providing information about the location and timing of brain activity that could not be revealed by either method in isolation. “We could see how visual information flows from the rear of the brain to the more anterior regions where objects are recognized and categorized,” says Pantazis. “It all happens within a few hundred milliseconds. You could not see this level of detail without the combination of fMRI and MEG.”
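The “similarity map” comparison can be illustrated with a short Python sketch. It is a simplified illustration under stated assumptions, not the team’s published pipeline: the data are synthetic, the region names “early” and “late” are hypothetical, and the choices of correlation distance and Pearson correlation for comparing maps are made here for convenience. Only the stimulus count of 92 comes from the study described above. For each fMRI region, the code asks at which MEG time point the pattern of stimulus-to-stimulus dissimilarities looks most alike.

```python
import numpy as np

def rdm(responses):
    """Dissimilarity map: 1 - Pearson correlation between the response
    patterns (rows) evoked by every pair of stimuli."""
    return 1.0 - np.corrcoef(responses)

def upper_triangle(matrix):
    """Each stimulus pair once, as a flat vector (diagonal excluded)."""
    rows, cols = np.triu_indices(matrix.shape[0], k=1)
    return matrix[rows, cols]

def fuse(meg, fmri_by_region):
    """meg: (n_stimuli, n_sensors, n_times); fmri_by_region: name -> (n_stimuli, n_voxels).
    Returns, per region, the correlation between its map and the MEG map at each time."""
    meg_maps = [upper_triangle(rdm(meg[:, :, t])) for t in range(meg.shape[2])]
    fused = {}
    for region, patterns in fmri_by_region.items():
        region_map = upper_triangle(rdm(patterns))
        fused[region] = np.array([np.corrcoef(region_map, m)[0, 1] for m in meg_maps])
    return fused

def simulate(n_stimuli=92, n_features=20, n_sensors=40, n_voxels=50, n_times=30, seed=0):
    """Synthetic data: two hypothetical regions, each with its own stimulus
    representation that shows up in the MEG signal around a different time."""
    rng = np.random.default_rng(seed)
    peaks = {"early": 8, "late": 18}                    # assumed peak time indices
    times = np.arange(n_times)
    meg = rng.normal(size=(n_stimuli, n_sensors, n_times))
    fmri = {}
    for region, peak in peaks.items():
        latent = rng.normal(size=(n_stimuli, n_features))
        envelope = np.exp(-0.5 * ((times - peak) / 2.0) ** 2)
        to_sensors = rng.normal(size=(n_features, n_sensors))
        meg += (latent @ to_sensors)[:, :, None] * envelope[None, None, :]
        to_voxels = rng.normal(size=(n_features, n_voxels))
        fmri[region] = latent @ to_voxels + rng.normal(size=(n_stimuli, n_voxels))
    return meg, fmri, peaks

meg, fmri, peaks = simulate()
fused = fuse(meg, fmri)
for region, curve in fused.items():
    print(region, "best-matching MEG time index:", int(curve.argmax()))
```

The appeal of this design is that the sensors and voxels never need to be matched directly. Only the stimulus-by-stimulus maps are compared, which is why recordings from two different machines can be aligned at all.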

Another study combining fMRI and MEG data focused on attention, a longstanding research interest for Desimone. Daniel Baldauf, a postdoc in Desimone’s lab, shares that fascination. “Our visual experience is amazingly rich,” says Baldauf. “Most mysteries about how we deal with all this information boil down to attention.”

Baldauf set out to study how the brain switches attention between two well-studied object categories, faces and houses. These stimuli are known to be processed by different brain areas, and Baldauf wanted to understand how signals might be routed to one area or the other during shifts of attention. By scanning subjects with MEG and fMRI, Baldauf identified a brain region, the inferior frontal junction (IFJ), that synchronizes its gamma oscillations with either the face or house areas depending on which stimulus the subject is attending to, akin to tuning a radio to a particular station.

Having found a way to trace attention within the brain, Desimone and his colleagues are now testing whether MEG can be used to improve attention. Together with Baldauf and two visiting students, Yasaman Bagherzadeh and Ben Lu, he has rigged the scanner so that subjects can watch feedback on their own brain activity on a screen, in real time, as it is being recorded. “By concentrating on a task, participants can learn to steer their own brain activity,” says Baldauf, who hopes to determine whether these exercises can help people perform better on everyday tasks that require attention.

Comfort zone

In addition to exploring basic questions about brain function, MEG is also a valuable tool for studying brain disorders such as autism. Margaret Kjelgaard, a clinical researcher at Massachusetts General Hospital, is collaborating with MIT faculty member Pawan Sinha to understand why people with autism often have trouble tolerating sounds, smells, and lights. This is difficult to study using fMRI, because subjects are often unable to tolerate the noise of the scanner, whereas they find MEG much more comfortable.

In the scanner, subjects listened to brief repetitive sounds as their brain responses were recorded. In healthy controls, the responses became weaker with repetition as the subjects adapted to the sounds. Those with autism, however, did not adapt. The results are still preliminary and as yet unpublished, but Kjelgaard hopes that the work will lead to a biomarker for autism, and perhaps eventually for other disorders.

In 2012, the McGovern Institute organized a symposium to mark the opening of the new lab. Cohen, who had invented MEG forty years earlier, spoke at the event and made a prediction: “Big things are probably going to happen here.” Two years on, researchers have pioneered new MEG data analysis techniques, invented novel ways to combine MEG and fMRI, and begun to explore the neural underpinnings of autism. Odds are, there are more big things to come.