Signs of Covid-19 may be hidden in speech signals

Processing vocal recordings of infected but asymptomatic people reveals potential indicators of Covid-19.

It’s often easy to tell when colleagues are struggling with a cold — they sound sick. Maybe their voices are lower or have a nasally tone. Infections change the quality of our voices in various ways. But MIT Lincoln Laboratory researchers are detecting these changes in Covid-19 patients even when these changes are too subtle for people to hear or even notice in themselves.

By processing speech recordings of people infected with Covid-19 but not yet showing symptoms, these researchers found evidence of vocal biomarkers, or measurable indicators, of the disease. These biomarkers stem from disruptions the infection causes in the movement of muscles across the respiratory, laryngeal, and articulatory systems. A technology letter describing this research was recently published in IEEE Open Journal of Engineering in Medicine and Biology.

While this research is still in its early stages, the initial findings lay a framework for studying these vocal changes in greater detail. This work may also hold promise for using mobile apps to screen people for the disease, particularly those who are asymptomatic.

Talking heads

“I had this ‘aha’ moment while I was watching the news,” says Thomas Quatieri, a senior staff member in the laboratory’s Human Health and Performance Systems Group. Quatieri has been leading the group’s research in vocal biomarkers for the past decade; their focus has been on discovering vocal biomarkers of neurological disorders such as amyotrophic lateral sclerosis (ALS) and Parkinson’s disease. These diseases, and many others, change the brain’s ability to turn thoughts into words, and those changes can be detected by processing speech signals.

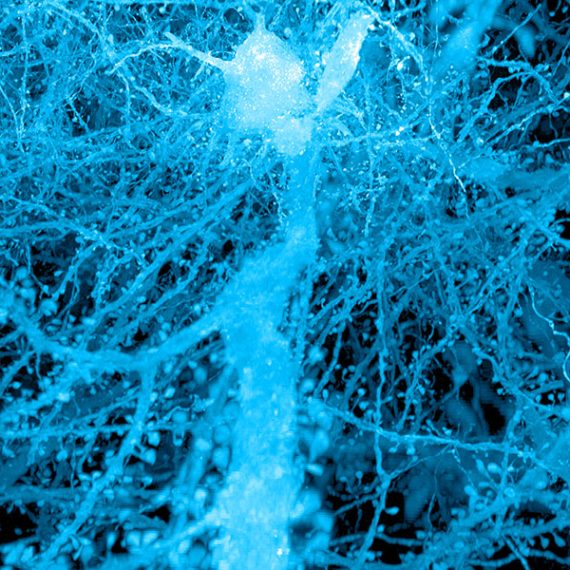

He and his team wondered whether vocal biomarkers might also exist for Covid-19. The symptoms led them to think so. When symptoms manifest, a person typically has difficulty breathing. Inflammation in the respiratory system affects the intensity with which air is exhaled when a person talks. This air interacts with hundreds of other potentially inflamed muscles on its journey to speech production. These interactions impact the loudness, pitch, steadiness, and resonance of the voice, measurable qualities that form the basis of their biomarkers.

While watching the news, Quatieri realized there were speech samples in front of him of people who had tested positive for Covid-19. He and his colleagues combed YouTube for clips of celebrities or TV hosts who had given interviews while they were Covid-19 positive but asymptomatic. They identified five subjects. Then, they downloaded interviews of those people from before they had Covid-19, matching audio conditions as best they could.

They then used algorithms to extract features from the vocal signals in each audio sample. “These vocal features serve as proxies for the underlying movements of the speech production systems,” says Tanya Talkar, a PhD candidate in the Speech and Hearing Bioscience and Technology program at Harvard University.

The signal’s amplitude, or loudness, was extracted as a proxy for movement in the respiratory system. For studying movements in the larynx, they measured pitch and the steadiness of pitch, two indicators of how stable the vocal cords are. As a proxy for articulator movements — like those of the tongue, lips, jaw, and more — they extracted speech formants. Speech formants are frequency measurements that correspond to how the mouth shapes sound waves to create a sequence of phonemes (vowels and consonants) and to contribute to a certain vocal quality (nasally versus warm, for example).
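As a rough illustration of two of these proxies, the sketch below computes a frame-by-frame loudness value and a pitch estimate from a synthetic tone. It is not the researchers' pipeline: the function names, the 40 ms analysis window, the 75 to 400 Hz pitch search range, and the autocorrelation pitch method are all assumptions chosen for simplicity, and formant extraction is left out.

```python
import numpy as np

def frame_signal(x, frame_len, hop):
    """Slice a 1-D signal into overlapping frames (one row per frame)."""
    n_frames = 1 + (len(x) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    return x[idx]

def rms_amplitude(frames):
    """Root-mean-square level per frame, a simple loudness proxy."""
    return np.sqrt(np.mean(frames ** 2, axis=1))

def autocorr_pitch(frame, sr, fmin=75.0, fmax=400.0):
    """Estimate pitch in Hz from the strongest autocorrelation peak.

    The search range (fmin, fmax) is an assumed range for adult speech.
    """
    frame = frame - frame.mean()
    ac = np.correlate(frame, frame, mode="full")[len(frame) - 1:]
    lo = int(sr / fmax)  # shortest lag considered
    hi = int(sr / fmin)  # longest lag considered
    lag = lo + np.argmax(ac[lo:hi + 1])
    return sr / lag

# Synthetic stand-in for a voiced sound: one second of a 200 Hz tone.
sr = 16000
t = np.arange(sr) / sr
x = 0.5 * np.sin(2 * np.pi * 200.0 * t)

# 40 ms windows advanced in 10 ms steps (both values are assumptions).
frames = frame_signal(x, frame_len=640, hop=160)
amp = rms_amplitude(frames)
pitch = np.array([autocorr_pitch(f, sr) for f in frames])

# Spread of the pitch track, a crude measure of pitch steadiness.
pitch_steadiness = float(np.std(pitch))
```

On real speech, the pitch estimate would only be meaningful in voiced frames, so a practical version would need a voicing check before measuring steadiness.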

They hypothesized that Covid-19 inflammation causes muscles across these systems to become overly coupled, resulting in a less complex movement. “Picture these speech subsystems as if they are the wrist and fingers of a skilled pianist; normally, the movements are independent and highly complex,” Quatieri says. Now, picture if the wrist and finger movements were to become stuck together, moving as one. This coupling would force the pianist to play a much simpler tune.

The researchers looked for evidence of coupling in their features, measuring how each feature changed in relation to another in 10 millisecond increments as the subject spoke. These values were then plotted on an eigenspectrum; the shape of this eigenspectrum plot indicates the complexity of the signals. “If the eigenspace of the values forms a sphere, the signals are complex. If there is less complexity, it might look more like a flat oval,” Talkar says.
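The idea can be sketched with synthetic data. The example below is an assumption-laden illustration, not the method from the published letter: it builds a plain correlation matrix across three made-up feature tracks, takes its eigenvalues, and summarizes the spread with an entropy-based "effective dimension". The function names and the summary measure are hypothetical choices.

```python
import numpy as np

def eigenspectrum(features):
    """Normalized eigenvalues of the feature correlation matrix, largest first.

    features: array of shape (n_frames, n_features), one row per time step.
    """
    corr = np.corrcoef(features, rowvar=False)
    eigvals = np.linalg.eigvalsh(corr)[::-1]  # eigvalsh returns ascending order
    eigvals = np.clip(eigvals, 0.0, None)     # guard against tiny negative values
    return eigvals / eigvals.sum()

def effective_dimension(spectrum):
    """Exponential of the spectrum's entropy: near n_features when the
    eigenvalues are even, near 1 when one eigenvalue dominates."""
    p = spectrum[spectrum > 0]
    return float(np.exp(-np.sum(p * np.log(p))))

rng = np.random.default_rng(0)
n_frames = 3000  # e.g. 30 seconds of speech at one value every 10 ms

# Three feature tracks that move independently (the "sphere" case).
independent = rng.standard_normal((n_frames, 3))

# Three tracks driven by one shared source (the "flat oval" case).
shared = rng.standard_normal((n_frames, 1))
coupled = shared + 0.2 * rng.standard_normal((n_frames, 3))

spec_independent = eigenspectrum(independent)
spec_coupled = eigenspectrum(coupled)

print("independent:", spec_independent, effective_dimension(spec_independent))
print("coupled:    ", spec_coupled, effective_dimension(spec_coupled))
```

In this toy setup, the coupled tracks put most of their variance into a single eigenvalue, which is the kind of reduced complexity the quote describes.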

In the end, they found a decreased complexity of movement in the Covid-19 interviews as compared to the pre-Covid-19 interviews. “The coupling was less prominent between larynx and articulator motion, but we’re seeing a reduction in complexity between respiratory and larynx motion,” Talkar says.

Early detections

These preliminary results hint that biomarkers derived from vocal system coordination can indicate the presence of Covid-19. However, the researchers note that it’s still too early to draw conclusions, and more data are needed to validate their findings. They’re working now with a publicly released dataset from Carnegie Mellon University that contains audio samples from individuals who have tested positive for Covid-19.

Beyond collecting more data to fuel this research, the team is looking at using mobile apps to implement it. A partnership is underway with Satra Ghosh at the MIT McGovern Institute for Brain Research to integrate vocal screening for Covid-19 into its VoiceUp app, which was initially developed to study the link between voice and depression. A follow-on effort could add this vocal screening into the How We Feel app. This app asks users questions about their daily health status and demographics, with the aim of using these data to pinpoint hotspots and predict the percentage of people who have the disease in different regions of the country. Asking users to also submit a daily voice memo to screen for biomarkers of Covid-19 could potentially help scientists catch on to an outbreak.

“A sensing system integrated into a mobile app could pick up on infections early, before people feel sick or, especially, for these subsets of people who don’t ever feel sick or show symptoms,” says Jeffrey Palmer, who leads the research group. “This is also something the U.S. Army is interested in as part of a holistic Covid-19 monitoring system.” Even after a diagnosis, this sensing ability could help doctors remotely monitor their patients’ progress or monitor the effects of a vaccine or drug treatment.

As the team continues their research, they plan to do more to address potential confounders that could cause inaccuracies in their results, such as different recording environments, the emotional status of the subjects, or other illnesses causing vocal changes. They’re also supporting similar research. The Mass General Brigham Center for COVID Innovation has connected them to international scientists who are following the team’s framework to analyze coughs.

“There are a lot of other interesting areas to look at. Here, we looked at the physiological impacts on the vocal tract. We’re also looking to expand our biomarkers to consider neurophysiological impacts linked to Covid-19, like the loss of taste and smell,” Quatieri says. “Those symptoms can affect speaking, too.”