Jacqueline Lees and Rebecca Saxe have been named associate deans serving in the MIT School of Science. Lees is the Virginia and D.K. Ludwig Professor for Cancer Research and is currently the associate director of the Koch Institute for Integrative Cancer Research, as well as an associate department head and professor in the Department of Biology at MIT. Saxe is the John W. Jarve (1978) Professor in Brain and Cognitive Sciences and the associate head of the Department of Brain and Cognitive Sciences (BCS); she is also an associate investigator in the McGovern Institute for Brain Research.

Lees and Saxe will both contribute to the school’s diversity, equity, inclusion, and justice (DEIJ) activities, as well as develop and implement mentoring and other career-development programs to support the community. From their home departments, Saxe and Lees bring years of DEIJ and mentorship experience to bear on the expansion of school-level initiatives.

Lees currently serves on the dean’s science council in her capacity as associate director of the Koch Institute. In this new role as associate dean for the School of Science, she will bring her broad administrative and programmatic experiences to bear on the next phase for DEIJ and mentoring activities.

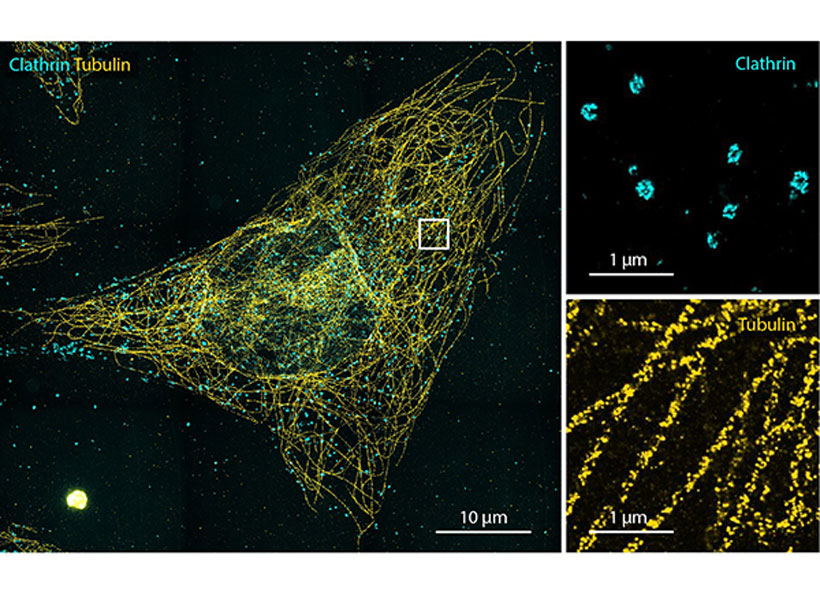

Lees joined MIT in 1994 as a faculty member in MIT’s Koch Institute (then the Center for Cancer Research) and Department of Biology. Her research focuses on regulators that control cellular proliferation, terminal differentiation, and stemness, functions that are frequently deregulated in tumor cells. She dissects the role of these proteins in normal cell biology and development, and establishes how their deregulation contributes to tumor development and metastasis.

Since 2000, she has served on the Department of Biology’s graduate program committee and has played a major role in expanding the diversity of the graduate student population. Lees also serves on DEIJ committees in her home department, as well as at the Koch Institute.

With co-chair Boleslaw Wyslouch, director of the Laboratory for Nuclear Science, Lees led the ReseArch Scientist CAreer LadderS (RASCALS) committee, which was tasked with evaluating career trajectories for research staff in the School of Science and making recommendations to recruit and retain talented staff, rewarding them for their contributions to the school’s research enterprise.

“Jackie is a powerhouse in translational research, demonstrating how fundamental work at the lab bench is critical for making progress at the patient bedside,” says Nergis Mavalvala, dean of the School of Science. “With Jackie’s dedicated and thoughtful partnership, we can continue to lead in basic research and develop the recruitment, retention, and mentoring necessary to support our community.”

Saxe will join Lees in supporting and developing programming across the school that could also provide direction more broadly at the Institute.

“Rebecca is an outstanding researcher in social cognition and a dedicated educator — someone who wants our students not only to learn, but to thrive,” says Mavalvala. “I am grateful that Rebecca will join the dean’s leadership team and bring her mentorship and leadership skills to enhance the school.”

For example, in collaboration with former department head James DiCarlo, the BCS department has focused on faculty mentorship of graduate students; and, in collaboration with Professor Mark Bear, the department developed postdoc salary and benefit standards. Both initiatives have become models at MIT.

With colleague Laura Schulz, Saxe also served as co-chair of the Committee on Medical Leave and Hospitalizations (CMLH), which outlined ways to enhance MIT’s current leave and hospitalization procedures and policies for undergraduate and graduate students. Saxe was also awarded MIT’s Committed to Caring award for excellence in graduate student mentorship, as well as the School of Science’s award for excellence in undergraduate teaching.

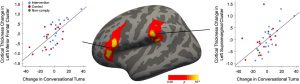

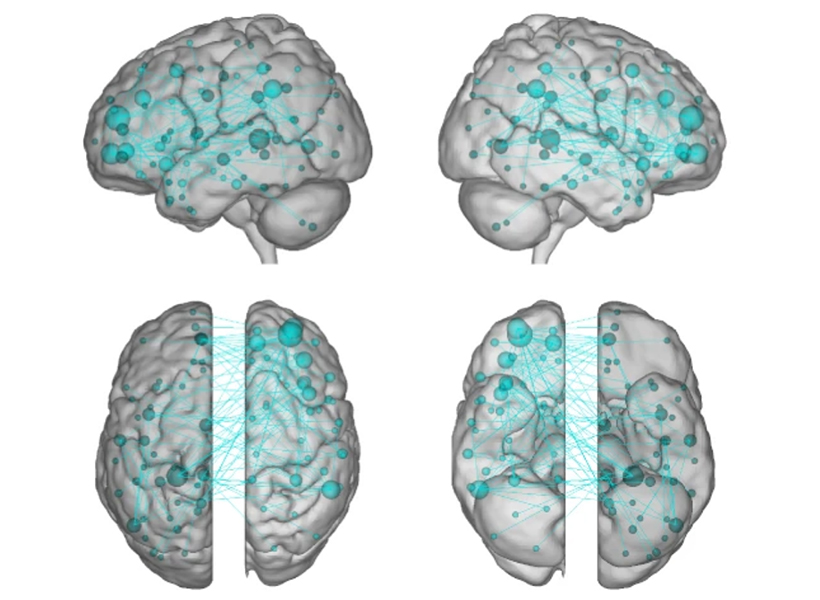

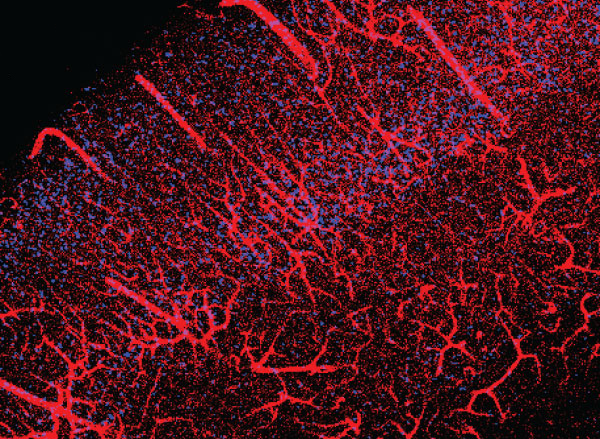

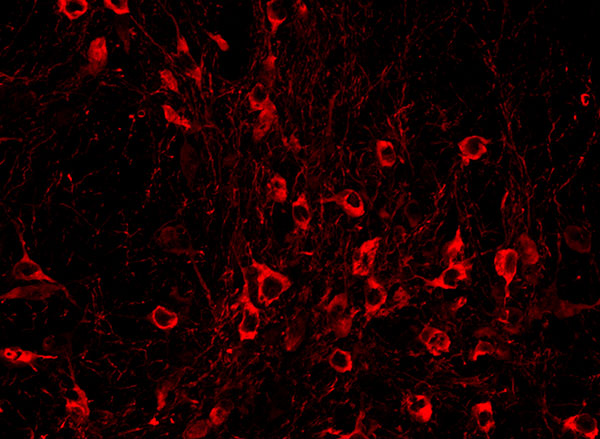

In her research, Saxe studies human social cognition, using a combination of behavioral testing and brain imaging technologies. She is best known for her work on brain regions specialized for abstract concepts, such as “theory of mind” tasks that involve understanding the mental states of other people. Her TED Talk, “How we read each other’s minds,” has been viewed more than 3 million times. She also studies the development of the human brain during early infancy.

She obtained her PhD from MIT and was a Harvard University junior fellow before joining the MIT faculty in 2006. In 2014, the National Academy of Sciences named her one of two recipients of the Troland Award for investigators age 40 or younger “to recognize unusual achievement and further empirical research in psychology regarding the relationships of consciousness and the physical world.” In 2020, Saxe was named a John Simon Guggenheim Foundation Fellow.

Saxe and Lees will also work closely with Kuheli Dutt, newly hired assistant dean for diversity, equity, and inclusion, and other members of the dean’s science council on school-level initiatives and strategy.

“I’m so grateful that Rebecca and Jackie have agreed to take on these new roles,” Mavalvala says. “And I’m super excited to work with these outstanding thought partners as we tackle the many puzzles that I come across as dean.”