The ability to use language to communicate is one of the things that makes us human. At MIT’s McGovern Institute, scientists led by Evelina Fedorenko have defined an entire network of areas within the brain dedicated to this ability, which work together when we speak, listen, read, write, or sign.

Much of the language network lies within the brain’s neocortex, where many of our most sophisticated cognitive functions are carried out. Now, Fedorenko’s lab, which is part of MIT’s Department of Brain and Cognitive Sciences, has identified language-processing regions within the cerebellum, extending the language network to a part of the brain better known for helping to coordinate the body’s movements. Their findings are reported January 21, 2026, in the journal Neuron.

“It’s like there’s this region in the cerebellum that we’ve been forgetting about for a long time,” says Colton Casto, a graduate student at Harvard and MIT who works in Fedorenko’s lab. “If you’re a language researcher, you should be paying attention to the cerebellum.”

Imaging the language network

There have been hints that the cerebellum makes important contributions to language. Some functional imaging studies detected activity in this area during language use, and people who suffer damage to the cerebellum sometimes experience language impairments. But no one had been able to pin down exactly which parts of the cerebellum were involved or tease out their roles in language processing.

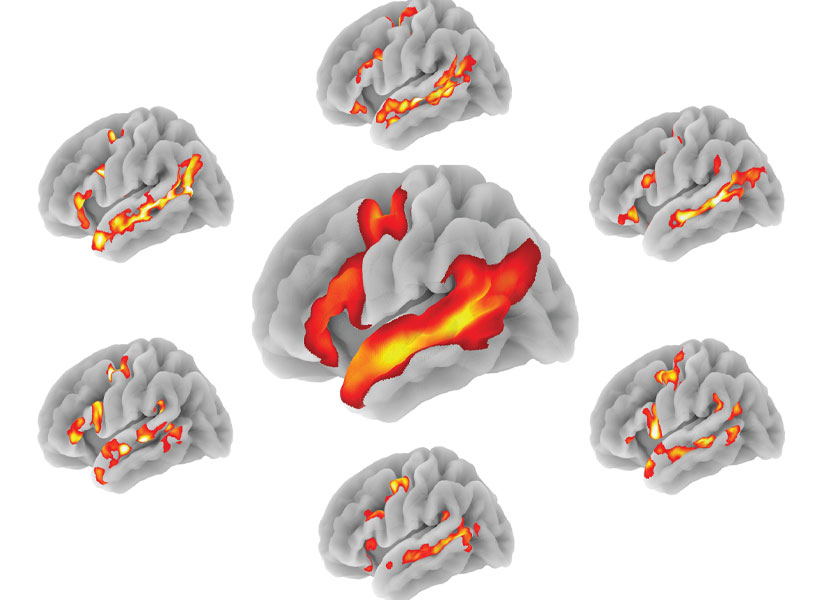

To get some answers, Fedorenko’s lab took a systematic approach, applying the methods they have used to map the language network in the neocortex. For 15 years, the lab has captured functional brain imaging data as volunteers carried out various tasks inside an MRI scanner. By monitoring brain activity as people engaged in different kinds of language tasks, like reading sentences or listening to spoken words, as well as non-linguistic tasks, like listening to noise or memorizing spatial patterns, the team has been able to identify parts of the brain that are exclusively dedicated to language processing.

Their work shows that everyone’s language network uses the same neocortical regions. The precise anatomical location of these regions varies, however, so to study the language network in any individual, Fedorenko and her team must map that person’s network inside an MRI scanner using their language-localizer tasks.

Satellite language network

While the Fedorenko lab has largely focused on how the neocortex contributes to language processing, their brain scans also capture activity in the cerebellum. So Casto revisited those scans, analyzing cerebellar activity from more than 800 people to look for regions involved in language processing. Fedorenko points out that teasing out the individual anatomy of the language network turned out to be particularly vital in the cerebellum, where neurons are densely packed and areas with different functional specializations sit very close to one another. Ultimately, Casto was able to identify four cerebellar areas that were consistently engaged during language use.

Three of these regions were clearly involved in language use, but also reliably became engaged during certain kinds of non-linguistic tasks. Casto says this was a surprise, because all the core language areas in the neocortex are dedicated exclusively to language processing. The researchers speculate that the cerebellum may be integrating information from different parts of the cortex—a function that could be important for many cognitive tasks.

“We’ve found that language is distinct from many, many other things—but at some point, complex cognition requires everything to work together,” Fedorenko says. “How do these different kinds of information get connected? Maybe parts of the cerebellum serve that function.”

The researchers also found a spot in the right posterior cerebellum with activity patterns that more closely echoed those of the language network in the neocortex. This region stayed silent during non-linguistic tasks, but became active during language use. For all of the linguistic activities that Casto analyzed, this region exhibited patterns of activity that were very similar to what the lab has seen in neocortical components of the language network. “Its contribution to language seems pretty similar,” Casto says. The team describes this area as a “cerebellar satellite” of the language network.

Still, the researchers think it’s unlikely that neurons in the cerebellum, which are organized very differently from those in the neocortex, replicate the precise function of other parts of the language network. Fedorenko’s team plans to explore the function of this satellite region more deeply, investigating whether it may participate in different kinds of tasks.

The researchers are also exploring the possibility that the cerebellum is particularly important for language learning—playing an outsized role during development or when people learn languages later in life.

Fedorenko says the discovery may also have implications for treating language impairments caused when an injury or disease damages the brain’s neocortical language network. “This area may provide a very interesting potential target to help recovery from aphasia,” Fedorenko says. Currently, researchers are exploring the possibility that non-invasively stimulating language-associated parts of the brain might promote language recovery. “This right cerebellar region may be just the right thing to potentially stimulate to up-regulate some of that function that’s lost,” Fedorenko says.