Music in the brain

For the first time, scientists identify a neural population highly selective for music.

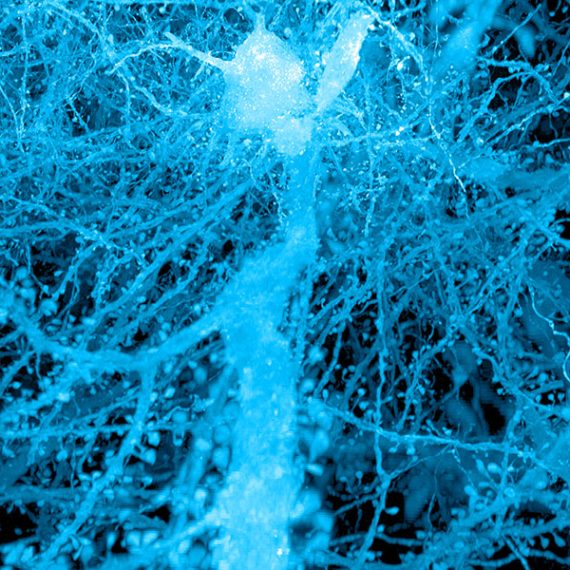

Scientists have long wondered if the human brain contains neural mechanisms specific to music perception. Now, for the first time, MIT neuroscientists have identified a neural population in the human auditory cortex that responds selectively to sounds that people typically categorize as music, but not to speech or other environmental sounds.

“It has been the subject of widespread speculation,” says Josh McDermott, the Frederick A. and Carole J. Middleton Assistant Professor of Neuroscience in the Department of Brain and Cognitive Sciences at MIT. “One of the core debates surrounding music is to what extent it has dedicated mechanisms in the brain and to what extent it piggybacks off of mechanisms that primarily serve other functions.”

The finding was enabled by a new method designed to identify neural populations from functional magnetic resonance imaging (fMRI) data. Using this method, the researchers identified six neural populations with different functions, including the music-selective population and another set of neurons that responds selectively to speech.

“The music result is notable because people had not been able to clearly see highly selective responses to music before,” says Sam Norman-Haignere, a postdoc at MIT’s McGovern Institute for Brain Research.

“Our findings are hard to reconcile with the idea that music piggybacks entirely on neural machinery that is optimized for other functions, because the neural responses we see are highly specific to music,” says Nancy Kanwisher, the Walter A. Rosenblith Professor of Cognitive Neuroscience at MIT and a member of MIT’s McGovern Institute for Brain Research.

Norman-Haignere is the lead author of a paper describing the findings in the Dec. 16 online edition of Neuron. McDermott and Kanwisher are the paper’s senior authors.

Mapping responses to sound

For this study, the researchers scanned the brains of 10 human subjects listening to 165 natural sounds, including different types of speech and music, as well as everyday sounds such as footsteps, a car engine starting, and a telephone ringing.

The brain’s auditory system has proven difficult to map, in part because of the coarse spatial resolution of fMRI, which measures blood flow as an index of neural activity. In fMRI, “voxels” — the smallest unit of measurement — reflect the response of hundreds of thousands or millions of neurons.

“As a result, when you measure raw voxel responses you’re measuring something that reflects a mixture of underlying neural responses,” Norman-Haignere says.

To tease apart these responses, the researchers used a technique that models each voxel as a mixture of multiple underlying neural responses. Using this method, they identified the six neural populations that best explained the data, each with a distinct pattern of responses to the sounds in the experiment.

“What we found is we could explain a lot of the response variation across tens of thousands of voxels with just six response patterns,” Norman-Haignere says.
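The general idea of explaining many voxels with a few shared response patterns can be illustrated with a small sketch. This is not the researchers' algorithm. It substitutes a different, well-known technique, non-negative matrix factorization with Lee-Seung multiplicative updates, and runs it on made-up data. The function names (`nmf`, `frobenius_error`), the matrix sizes, and the toy "voxels" are all hypothetical. Each row of `h` plays the role of one population's response profile across sounds, and each row of `w` gives how strongly each population contributes to one voxel.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def transpose(a):
    """Swap rows and columns of a list-of-rows matrix."""
    return [list(col) for col in zip(*a)]


def frobenius_error(v, w, h):
    """Frobenius norm of V - W @ H: how badly the mixture model fits the data."""
    approx = matmul(w, h)
    return sum((x - y) ** 2 for rv, ra in zip(v, approx) for x, y in zip(rv, ra)) ** 0.5


def nmf(v, k, iterations=300, seed=0, eps=1e-9):
    """Factor V (voxels x sounds) into W (voxels x k) and H (k x sounds).

    Hypothetical stand-in for a voxel decomposition: uses Lee-Seung
    multiplicative updates, which keep every entry non-negative.
    """
    rng = random.Random(seed)
    n_vox, n_snd = len(v), len(v[0])
    w = [[rng.random() for _ in range(k)] for _ in range(n_vox)]
    h = [[rng.random() for _ in range(n_snd)] for _ in range(k)]
    for _ in range(iterations):
        # Update the response profiles: H <- H * (W^T V) / (W^T W H)
        wt = transpose(w)
        num = matmul(wt, v)
        den = matmul(matmul(wt, w), h)
        h = [[h[i][j] * num[i][j] / (den[i][j] + eps) for j in range(n_snd)]
             for i in range(k)]
        # Update the voxel weights: W <- W * (V H^T) / (W H H^T)
        ht = transpose(h)
        num = matmul(v, ht)
        den = matmul(w, matmul(h, ht))
        w = [[w[i][j] * num[i][j] / (den[i][j] + eps) for j in range(k)]
             for i in range(n_vox)]
    return w, h


# Hypothetical toy data: 3 made-up response profiles over 8 sounds,
# mixed with random non-negative weights into 20 simulated voxels.
data_rng = random.Random(42)
true_h = [[data_rng.random() for _ in range(8)] for _ in range(3)]
true_w = [[data_rng.random() for _ in range(3)] for _ in range(20)]
voxels = matmul(true_w, true_h)

w, h = nmf(voxels, k=3)
print("residual:", round(frobenius_error(voxels, w, h), 4))
```

Because the toy voxels were built from three profiles, three components should fit them far better than the random starting point. Real fMRI data are noisy, and a plain factorization like this one has ambiguities that a dedicated method has to resolve.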

One population responded most to music, another to speech, and the other four to different acoustic properties such as pitch and frequency.

The key to this advance is the researchers’ new approach to analyzing fMRI data, says Josef Rauschecker, a professor of physiology and biophysics at Georgetown University.

“The whole field is interested in finding specialized areas like those that have been found in the visual cortex, but the problem is the voxel is just not small enough. You have hundreds of thousands of neurons in a voxel, and how do you separate the information they’re encoding? This is a study of the highest caliber of data analysis,” says Rauschecker, who was not part of the research team.

Layers of sound processing

The four acoustically responsive neural populations overlap with regions of “primary” auditory cortex, which performs the first stage of cortical processing of sound. The speech- and music-selective neural populations lie beyond this primary region.

“We think this provides evidence that there’s a hierarchy of processing where there are responses to relatively simple acoustic dimensions in this primary auditory area. That’s followed by a second stage of processing that represents more abstract properties of sound related to speech and music,” Norman-Haignere says.

The researchers believe there may be other brain regions involved in processing music, including its emotional components. “It’s inappropriate at this point to conclude that this is the seat of music in the brain,” McDermott says. “This is where you see most of the responses within the auditory cortex, but there’s a lot of the brain that we didn’t even look at.”

Kanwisher also notes that “the existence of music-selective responses in the brain does not imply that the responses reflect an innate brain system. An important question for the future will be how this system arises in development: How early it is found in infancy or childhood, and how dependent it is on experience?”

The researchers are now investigating whether the music-selective population identified in this study contains subpopulations of neurons that respond to different aspects of music, including rhythm, melody, and beat. They also hope to study how musical experience and training might affect this neural population.