The 2019 Warren Alpert Foundation Prize has been awarded to four scientists, including Ed Boyden, for pioneering work that launched the field of optogenetics, a technique that uses light-sensitive channels and pumps to control the activity of neurons in the brain with the flick of a switch. Boyden shares the prize with Karl Deisseroth, Peter Hegemann, and Gero Miesenböck, as outlined by the Warren Alpert Foundation in its announcement.

Harnessing light and genetics, the approach illuminates and modulates the activity of neurons, enables the study of brain function and behavior, and helps reveal activity patterns that could be harnessed to overcome brain diseases.

Boyden’s work was key to envisioning and developing optogenetics, now a core method in neuroscience. In genetic models, the method allows researchers to unravel brain circuits linked to complex behavioral processes, such as decision-making, feeding, and sleep. It is also helping to elucidate the mechanisms underlying neuropsychiatric disorders and has the potential to inspire new strategies to overcome brain disorders.

“It is truly an honor to be included among the extremely distinguished list of winners of the Alpert Award,” says Boyden, the Y. Eva Tan Professor in Neurotechnology at the McGovern Institute, MIT. “To me personally, it is exciting to see the relatively new field of neurotechnology recognized. The brain implements our thoughts and feelings. It makes us who we are. This mystery and challenge requires new technologies to make the brain understandable and repairable. It is a great honor that our technology of optogenetics is being thus recognized.”

While they were students, Boyden and fellow awardee Karl Deisseroth brainstormed about how microbial opsins could be used to mediate optical control of neural activity. In mid-2004, the pair collaborated to demonstrate that microbial opsins can indeed be used this way. After Boyden launched his lab at MIT, his team developed the first optogenetic silencing tool, the first effective optogenetic silencing in live mammals, noninvasive optogenetic silencing, and single-cell optogenetic control.

“The discoveries made by this year’s four honorees have fundamentally changed the landscape of neuroscience,” said George Q. Daley, dean of Harvard Medical School. “Their work has enabled scientists to see, understand and manipulate neurons, providing the foundation for understanding the ultimate enigma—the human brain.”

Beyond optogenetics, Boyden has pioneered transformative technologies that image, record, and manipulate complex systems, including expansion microscopy, robotic patch clamping, and a method for shrinking objects to the nanoscale. This year he was elected to the National Academy of Sciences and selected as an HHMI Investigator. Boyden has received numerous awards for this work, including the 2018 Gairdner International Prize and the 2016 Breakthrough Prize in Life Sciences.

The Warren Alpert Foundation, in association with Harvard Medical School, honors scientists whose work has improved the understanding, prevention, treatment or cure of human disease. Prize recipients are selected by the foundation’s scientific advisory board, which is composed of distinguished biomedical scientists and chaired by the dean of Harvard Medical School. The honorees will share a $500,000 prize and will be recognized at a daylong symposium on Oct. 3 at Harvard Medical School.

Ed Boyden holds the titles of Investigator, McGovern Institute; Y. Eva Tan Professor in Neurotechnology at MIT; Leader, Synthetic Neurobiology Group, Media Lab; Associate Professor, Biological Engineering, Brain and Cognitive Sciences, Media Lab; Co-Director, MIT Center for Neurobiological Engineering; Member, MIT Center for Environmental Health Sciences, Computational and Systems Biology Initiative, and Koch Institute.

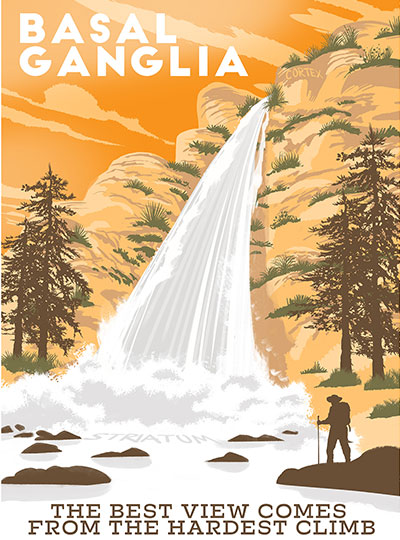

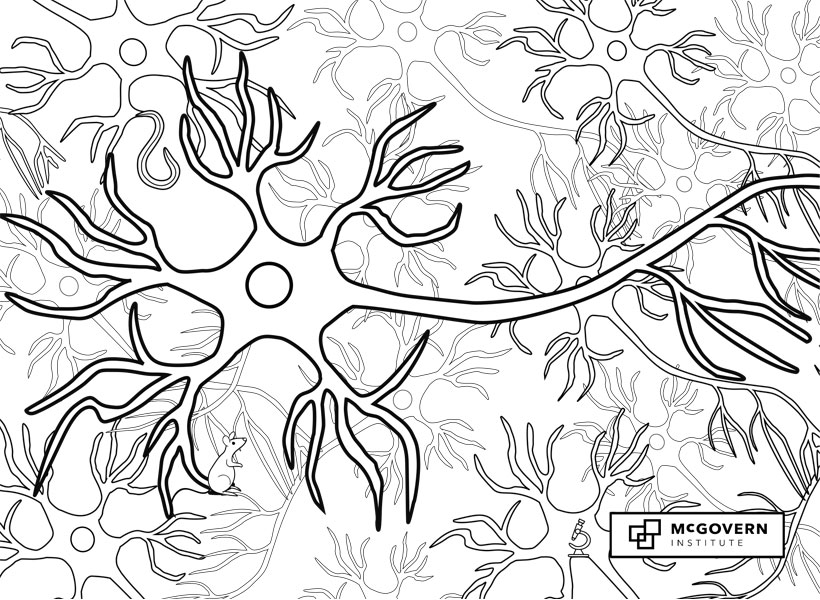

Signals flow through the nervous system from one neuron to the next across synapses.

Signals flow through the nervous system from one neuron to the next across synapses. The axon is the long, thin neural cable that carries electrical impulses called action potentials from the soma to synaptic terminals at downstream neurons.

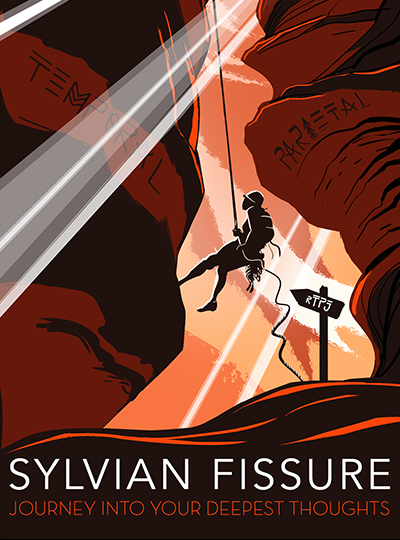

The axon is the long, thin neural cable that carries electrical impulses called action potentials from the soma to synaptic terminals at downstream neurons. The soma, or cell body, is the control center of the neuron, where the nucleus is located.

The soma, or cell body, is the control center of the neuron, where the nucleus is located. Long branching neuronal processes called dendrites receive synaptic inputs from thousands of other neurons and carry those signals to the cell body.

Long branching neuronal processes called dendrites receive synaptic inputs from thousands of other neurons and carry those signals to the cell body.