The School of Engineering is welcoming 17 new faculty members to its departments, institutes, labs, and centers. With research and teaching activities ranging from the development of robotics and machine learning technologies to modeling the impact of elevated carbon dioxide levels on vegetation, they are poised to make significant contributions in new directions across the school and to a wide range of research efforts around the Institute.

“I am delighted to welcome our wonderful new faculty,” says Anantha Chandrakasan, dean of the MIT School of Engineering and Vannevar Bush Professor of Electrical Engineering and Computer Science. “Their impact as talented educators, researchers, collaborators, and mentors will be felt across the School of Engineering and beyond as they strengthen our engineering community.”

Among the new faculty members are four from the Department of Electrical Engineering and Computer Science (EECS), which jointly reports into the School of Engineering and the MIT Stephen A. Schwarzman College of Computing.

Iwnetim “Tim” Abate will join the Department of Materials Science and Engineering in July 2023. He is currently both a Miller and Presidential Postdoctoral Fellow at the University of California at Berkeley. He received his MS and PhD in materials science and engineering from Stanford University and his BS in physics from Minnesota State University at Moorhead. He also has research experience in industry (IBM) and at national labs (Los Alamos National Laboratory and SLAC National Accelerator Laboratory). Using computational and experimental approaches in tandem, his research program at MIT will focus on the intersection of materials chemistry, electrochemistry, and condensed matter physics to develop solutions for climate change and smart agriculture, including next-generation battery and sensor devices. Abate is also a co-founder and president of a nonprofit organization, SciFro Inc., which works to empower African youth and underrepresented minorities in the United States to solve local problems through scientific research and innovation. He will continue expanding the vision and impact of SciFro with the MIT community. Abate received the Dan Cubicciotti Award of the Electrochemical Society, the EDGE and DARE graduate fellowships, the United Technologies Research Center fellowship, the John Stevens Jr. Memorial Award, and the Justice, Equity, Diversity and Inclusion Graduation Award from Stanford University. He will hold the Toyota Career Development Professorship at MIT.

Kaitlyn Becker will join the Department of Mechanical Engineering as an assistant professor in August 2022. Becker received her PhD in materials science and mechanical engineering from Harvard University in 2021 and previously worked in industry as a manufacturing engineer at Cameron Health and a senior engineer for Nano Terra, Inc. She is a postdoc at the Harvard University School of Engineering and Applied Sciences and is also currently a senior glassblowing instructor in the Department of Materials Science and Engineering at MIT. Becker works on adaptive soft robots for grasping and manipulation of delicate structures from the desktop to the deep sea. Her research focuses on novel soft robotic platforms, adding functionality through innovations at the intersection of design and fabrication. She has developed novel fabrication methodologies and mechanical programming methods for large integrated arrays of soft actuators capable of collective manipulation and locomotion, and demonstrated integration of microfluidic circuits to control arrays of multichannel, two-degrees-of-freedom soft actuators. Becker received the National Science Foundation Graduate Research Fellowship in 2015, the Microsoft Graduate Women’s Scholarship in 2015, the Winston Chen Graduate Fellowship in 2015, and the Courtlandt S. Gross Memorial Scholarship in 2014.

Brandon J. DeKosky joined the Department of Chemical Engineering in September 2021 as an assistant professor in a newly introduced joint faculty position between the department and the Ragon Institute of MGH, MIT, and Harvard. He received his BS in chemical engineering from the University of Kansas and his PhD in chemical engineering from the University of Texas at Austin. He then did postdoctoral research at the Vaccine Research Center of the National Institute of Allergy and Infectious Diseases. In 2017, DeKosky launched his independent academic career as an assistant professor at the University of Kansas in a joint position with the Department of Chemical Engineering and the Department of Pharmaceutical Chemistry. He was also a member of the bioengineering graduate program. His research program focuses on developing and applying a suite of new high-throughput experimental and computational platforms for molecular analysis of adaptive immune responses, to accelerate precision drug discovery. His recognitions include the NIH K99 Path to Independence and NIH DP5 Early Independence awards, the Cellular and Molecular Bioengineering Rising Star Award from the Biomedical Engineering Society, and the Career Development Award from the Congressionally Directed Medical Research Program’s Peer Reviewed Cancer Research Program.

Mohsen Ghaffari will join the Department of Electrical Engineering and Computer Science in April 2022. He received his BS from the Sharif University of Technology, and his MS and PhD in EECS from MIT. His research focuses on distributed and parallel algorithms for large graphs. Ghaffari received the ACM Doctoral Dissertation Honorable Mention Award, the ACM-EATCS Principles of Distributed Computing Doctoral Dissertation Award, and the George M. Sprowls Award for Best Computer Science PhD thesis at MIT. Before coming to MIT, he was on the faculty at ETH Zurich, where he received a prestigious European Research Council Starting Grant.

Aristide Gumyusenge joined the Department of Materials Science and Engineering in January. Before joining MIT, he was a postdoc at Stanford University working with Professor Zhenan Bao and Professor Alberto Salleo. He received a BS in chemistry from Wofford College in 2015 and a PhD in chemistry from Purdue University in 2019. His research background and interests are in semiconducting polymers, their processing and characterization, and their unique role in the future of electronics. In particular, he has tackled longstanding challenges in the operational stability of semiconducting polymers under extreme heat and has pioneered high-temperature plastic electronics. He has been selected as a PMSE Future Faculty Scholar (2021), a GLAM Postdoctoral Fellow (2020-22), and an MRS Arthur Nowick and Graduate Student Gold Awardee (2019), among other recognitions. At MIT, he leads the Laboratory of Organic Materials for Smart Electronics (OMSE Lab). Through polymer design, novel processing strategies, and large-area manufacturing of electronic devices, he is interested in relating molecular design to device performance, especially in transistor devices that can mimic and interface with biological systems. He holds the Merton C. Flemings Career Development Professorship.

Mina Konakovic Lukovic will join the Department of Electrical Engineering and Computer Science as an assistant professor in July 2022. She received her BS and MS from the Faculty of Mathematics at the University of Belgrade. She earned her PhD in 2019 in the School of Computer and Communication Sciences at the Swiss Federal Institute of Technology Lausanne, advised by Professor Mark Pauly. Currently a Schmidt Science Postdoctoral Fellow in MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), she has been mentored by Professor Wojciech Matusik. Her research focuses on computer graphics, computational fabrication, 3D geometry processing, and machine learning, including architectural geometry and the design of programmable materials. She received the ACM SIGGRAPH Outstanding Doctoral Dissertation Honorable Mention and the Eurographics PhD Award, and she was recently awarded the 2021 SIAM Activity Group on Geometric Design Early Career Prize.

Darcy McRose will join the Department of Civil and Environmental Engineering as an assistant professor in August 2022. She completed a BS in Earth systems at Stanford and a PhD in geosciences at Princeton University. McRose is currently conducting postdoctoral work at Caltech, where she is mentored by Professor Dianne Newman in the divisions of Biology and Biological Engineering and Geological and Planetary Sciences. Her research program focuses on microbe-environment interactions and their effects on biogeochemical cycles, and incorporates techniques ranging from microbial physiology and genetics to geochemistry. A particular emphasis of this work is the production and use of secondary metabolites and small molecules in soils and sediments. McRose received the Caltech BBE Division postdoctoral fellowship in 2019 and is currently a Simons Foundation Marine Microbial Ecology postdoctoral fellow as well as a L’Oréal USA for Women in Science fellow.

Qin (Maggie) Qi joined the Department of Chemical Engineering as an assistant professor in January 2022. She received BS degrees in chemical engineering and in operations research from Cornell University before moving on to Stanford University for her PhD. She then took a postdoc position at the Harvard University School of Engineering and Applied Sciences and the Wyss Institute. Qi’s proposed research combines extensive theoretical and computational work, building predictive models that guide experimental design. She seeks to investigate particle-cell biomechanics and function for better targeted cell-based therapies. She also plans to design microphysiological systems that elucidate hydrodynamics in complex organs, including the delivery of drugs to the eye, and to examine ionic liquids as complex fluids for biomaterial design. She aims to push the boundaries of fluid mechanics, transport phenomena, and soft matter for human health and to innovate precision health care solutions. Qi received the T.S. Lo Graduate Fellowship and the Stanford Graduate Fellowship in Science and Engineering. She was also a participant in the inaugural class of the MIT Rising Stars in ChemE Program in 2018.

Manish Raghavan will join the MIT Sloan School of Management and the Department of Electrical Engineering and Computer Science as an assistant professor in September 2022. He shares a joint appointment with the MIT Schwarzman College of Computing. He received a bachelor’s degree in electrical engineering and computer science from the University of California at Berkeley and a PhD from the Department of Computer Science at Cornell University. Prior to joining MIT, he was a postdoc at the Harvard Center for Research on Computation and Society. His research interests lie in the application of computational techniques to domains of social concern, including algorithmic fairness and behavioral economics, with a particular focus on the use of algorithmic tools in the hiring pipeline. He is also a member of Cornell’s Artificial Intelligence, Policy, and Practice initiative and of the Mechanism Design for Social Good initiative.

Ritu Raman joined the Department of Mechanical Engineering as an assistant professor and Brit and Alex d’Arbeloff Career Development Chair in August 2021. Raman received her PhD in mechanical engineering from the University of Illinois at Urbana-Champaign in 2016 as an NSF Graduate Research Fellow and completed a postdoctoral fellowship with Professor Robert Langer at MIT, funded by a NASEM Ford Foundation Fellowship and a L’Oréal USA for Women in Science Fellowship. Raman’s lab designs adaptive living materials powered by assemblies of living cells for applications ranging from medicine to machines. Currently, she is focused on using biological materials and engineering tools to build living neuromuscular tissues. Her goal is to help restore mobility to those who have lost it after disease or trauma and to deploy biological actuators as functional components in machines. Raman published the book Biofabrication with MIT Press in September 2021. She was named to the MIT Technology Review “35 Innovators Under 35” class of 2019 and the Forbes “30 Under 30” class of 2018. She has received numerous awards, including being named a National Academy of Sciences Kavli Frontiers of Science Fellow in 2020 and receiving the Science and Sartorius Prize for Regenerative Medicine and Cell Therapy in 2019. Raman has championed many initiatives to empower women in science, including being named an AAAS IF/THEN ambassador and founding the Women in Innovation and STEM Database at MIT (WISDM).

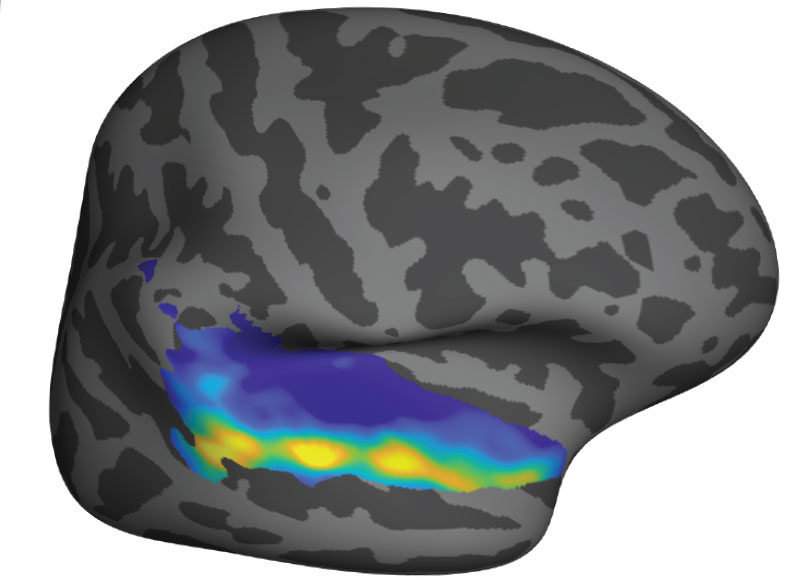

Nidhi Seethapathi joined the Department of Brain and Cognitive Sciences and the Department of Electrical Engineering and Computer Science in January 2022. She shares a joint appointment with the MIT Schwarzman College of Computing. She received a bachelor’s degree in mechanical engineering from Veermata Jijabai Technological Institute and a PhD from Ohio State University, where she worked in the Movement Lab. Her research interests include building computational predictive models of human movement with applications to autonomous and robot-aided neuromotor rehabilitation. In her work, she uses a combination of tools and approaches from dynamics, control theory, and machine learning. During her PhD, she was a Schlumberger Foundation Faculty for the Future Fellow. She then worked as a postdoc in the Kording Lab at the University of Pennsylvania, developing data-driven tools for autonomous neuromotor rehabilitation in collaboration with the Rehabilitation Robotics Lab.

Vincent Sitzmann will join the Department of Electrical Engineering and Computer Science as an assistant professor in July 2022. He earned his BS from the Technical University of Munich in 2015, his MS from Stanford in 2017, and his PhD from Stanford in 2020. At MIT, he will be the principal investigator of the Scene Representation Group, where he will lead research at the intersection of machine learning, graphics, neural rendering, and computer vision to build algorithms that learn to reconstruct, understand, and interact with 3D environments from incomplete observations, as humans can. Currently, Sitzmann is a postdoc at the MIT Computer Science and Artificial Intelligence Laboratory with Josh Tenenbaum, Bill Freeman, and Frédo Durand. In addition to multiple scholarships and fellowships, he received an Outstanding New Directions Honorable Mention at NeurIPS 2019.

Tess Smidt joined the Department of Electrical Engineering and Computer Science as an assistant professor in September 2021. She earned her SB in physics from MIT in 2012 and her PhD in physics from the University of California at Berkeley in 2018. She is the principal investigator of the Atomic Architects group at the Research Laboratory of Electronics, where she works at the intersection of physics, geometry, and machine learning to design algorithms that aid in the understanding and design of physical systems. Her research focuses on machine learning that incorporates physical and geometric constraints, with applications to materials design. Prior to joining the MIT EECS faculty, she was the 2018 Alvarez Postdoctoral Fellow in Computing Sciences at Lawrence Berkeley National Laboratory and a software engineering intern on the Google Accelerated Sciences team, where she developed Euclidean-symmetry-equivariant neural networks that naturally handle 3D geometry and geometric tensor data.

Loza Tadesse will join the Department of Mechanical Engineering as an assistant professor in July 2023. She received her PhD in bioengineering from Stanford University in 2021 and previously was a medical student at St. Paul Hospital Millennium Medical College in Ethiopia. She is currently a postdoc at the University of California at Berkeley. Tadesse’s past research combined Raman spectroscopy and machine learning to develop a rapid, all-optical, and label-free bacterial diagnostic and antibiotic susceptibility testing system that aims to circumvent the time-consuming culturing step in “gold standard” methods. She aims to establish a research program that develops next-generation point-of-care diagnostic devices using spectroscopy, optical, and machine learning tools for application in resource-limited clinical settings such as developing nations, military sites, and space exploration. Tadesse was named to the 2022 Forbes “30 Under 30” list in health care and has received many awards, including the Biomedical Engineering Society (BMES) Career Development Award, the Stanford DARE Fellowship, and the Gates Foundation “Call to Action” $200,000 grant for SciFro Inc., an educational nonprofit in Ethiopia that she co-founded.

César Terrer joined the Department of Civil and Environmental Engineering as an assistant professor in July 2021. He obtained his PhD in ecosystem ecology and climate change from Imperial College London, where he started working at the interface between experiments and models to better understand the effects of elevated carbon dioxide on vegetation. His research has advanced the understanding of the effects of carbon dioxide in terrestrial ecosystems, the role of soil nutrients in a climate change context, and plant-soil interactions. Synthesizing observational data from carbon dioxide experiments and satellites through meta-analysis and machine learning, Terrer has found that microbial interactions between plants and soils play a major role in the carbon cycle at a global scale, affecting the speed of global warming.

Haruko Wainwright joined the Department of Nuclear Science and Engineering as an assistant professor in January 2021. She received her BEng in engineering physics from Kyoto University, Japan, in 2003, and her MS in nuclear engineering (2006), MA in statistics (2010), and PhD in nuclear engineering (2010) from the University of California at Berkeley. Before joining MIT, she was a staff scientist in the Earth and Environmental Sciences Area at Lawrence Berkeley National Laboratory and an adjunct professor in nuclear engineering at UC Berkeley. Her research focuses on environmental modeling and monitoring technologies, with a particular emphasis on nuclear waste and nuclear-related contamination. She has been developing Bayesian methods for multi-type multiscale data integration and model-data integration. She leads and co-leads multiple interdisciplinary projects, including the U.S. Department of Energy’s Advanced Long-term Environmental Monitoring Systems (ALTEMIS) project and the Artificial Intelligence for Earth System Predictability (AI4ESP) initiative.

Martin Wainwright will join the Department of Electrical Engineering and Computer Science in July 2022. He received a bachelor’s degree in mathematics from the University of Waterloo, Canada, and a PhD in EECS from MIT. Prior to joining MIT, he was a Chancellor’s Professor at the University of California at Berkeley, with a joint appointment between the Department of Statistics and the Department of EECS. His research interests include high-dimensional statistics, statistical machine learning, information theory, and optimization theory. Among other awards, he has received the COPSS Presidents’ Award (2014) from the Joint Statistical Societies, the David Blackwell Lectureship (2017) and the Medallion Lectureship (2013) from the Institute of Mathematical Statistics, and Best Paper awards from the IEEE Signal Processing Society and the IEEE Information Theory Society. He was a Section Lecturer at the International Congress of Mathematicians in 2014.