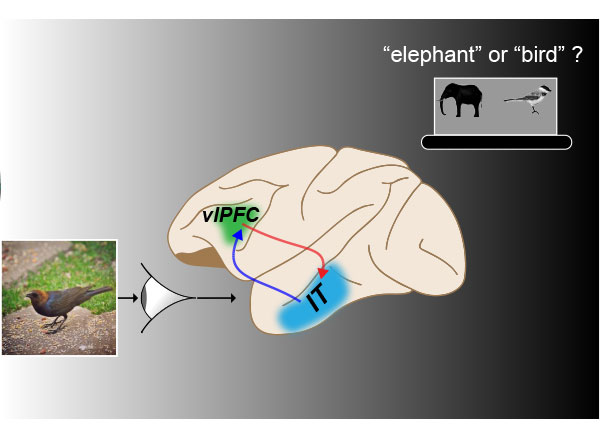

MIT researchers have identified a brain pathway critical in enabling primates to effortlessly identify objects in their field of vision. The findings enrich existing models of the neural circuitry involved in visual perception and help to further unravel the computational code for solving object recognition in the primate brain.

Led by Kohitij Kar, a postdoctoral associate at the McGovern Institute for Brain Research and Department of Brain and Cognitive Sciences, the study looked at an area called the ventrolateral prefrontal cortex (vlPFC), which sends feedback signals to the inferior temporal (IT) cortex via a network of neurons. The main goal of this study was to test how the back-and-forth information processing of this circuitry, that is, this recurrent neural network, is essential to rapid object identification in primates.

The current study, published in Neuron and available today via open access, is a follow-up to prior work published by Kar and James DiCarlo, Peter de Florez Professor of Neuroscience, the head of MIT’s Department of Brain and Cognitive Sciences, and an investigator in the McGovern Institute for Brain Research and the Center for Brains, Minds, and Machines.

Monkey versus machine

In 2019, Kar, DiCarlo, and colleagues found that primates must use some recurrent circuits during rapid object recognition. Monkey subjects in that study were able to identify objects more accurately than engineered “feedforward” computational models, called deep convolutional neural networks, that lacked recurrent circuitry.

Interestingly, the specific images for which models performed poorly compared to monkeys in object identification also took longer to be solved in the monkeys’ brains, suggesting that the additional time might be due to recurrent processing in the brain. The 2019 study left unclear, though, exactly which recurrent circuits were responsible for the delayed information boost in the IT cortex. That’s where the current study picks up.

“In this new study, we wanted to find out: Where are these recurrent signals in IT coming from?” Kar said. “Which areas reciprocally connected to IT are functionally the most critical part of this recurrent circuit?”

To determine this, researchers used a pharmacological agent to temporarily block the activity in parts of the vlPFC in macaques while they engaged in an object discrimination task. During these tasks, monkeys viewed images that contained an object, such as an apple, a car, or a dog; then, researchers used eye tracking to determine if the monkeys could correctly indicate what object they had previously viewed when given two object choices.

“We observed that if you use pharmacological agents to partially inactivate the vlPFC, then both the monkeys’ behavior and IT cortex activity deteriorate, but more so for certain specific images. These images were the same ones we identified in the previous study, ones that were poorly solved by ‘feedforward’ models and took longer to be solved in the monkeys’ IT cortex,” said Kar.

“These results provide evidence that this recurrently connected network is critical for rapid object recognition, the behavior we’re studying. Now, we have a better understanding of how the full circuit is laid out, and what are the key underlying neural components of this behavior.”

The full study, entitled “Fast recurrent processing via ventrolateral prefrontal cortex is needed by the primate ventral stream for robust core visual object recognition,” will run in print January 6, 2021.

“This study demonstrates the importance of prefrontal cortical circuits in automatically boosting object recognition performance in a very particular way,” DiCarlo said. “These results were obtained in nonhuman primates and thus are highly likely to also be relevant to human vision.”

The present study makes clear the integral role of the recurrent connections between the vlPFC and the primate ventral visual cortex during rapid object recognition. The results will be helpful to researchers designing future studies that aim to develop accurate models of the brain, and to researchers who seek to develop more human-like artificial intelligence.