Yiming Chen ’24, Wilhem Hector, Anushka Nair, and David Oluigbo have been selected as 2025 Rhodes Scholars and will begin fully funded postgraduate studies at Oxford University in the U.K. next fall. In addition to MIT’s two U.S. Rhodes winners, Oluigbo and Nair, two affiliates were awarded international Rhodes Scholarships: Chen for Rhodes’ China constituency and Hector for the Global Rhodes Scholarship. Hector is the first Haitian citizen to be named a Rhodes Scholar.

The scholars were supported by Associate Dean Kim Benard and the Distinguished Fellowships team in Career Advising and Professional Development. They received additional mentorship and guidance from the Presidential Committee on Distinguished Fellowships.

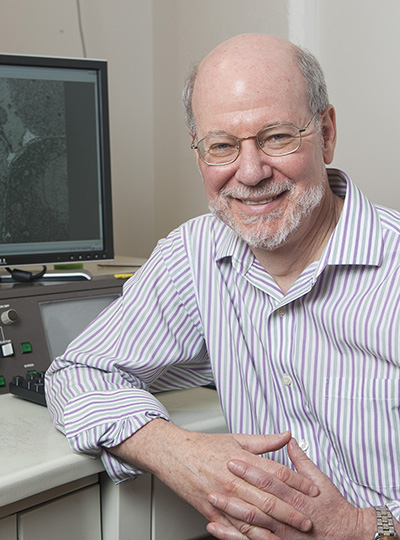

“It is profoundly inspiring to work with our amazing students, who have accomplished so much at MIT and, at the same time, thought deeply about how they can have an impact in solving the world’s major challenges,” says Professor Nancy Kanwisher, who co-chairs the committee along with Professor Tom Levenson. “These students have worked hard to develop and articulate their vision and to learn to communicate it to others with passion, clarity, and confidence. We are thrilled but not surprised to see so many of them recognized this year as finalists and as winners.”

Yiming Chen ’24

Yiming Chen, from Beijing, China, and the Washington area, was named one of four Rhodes China Scholars on Sept. 28. At Oxford, she will pursue graduate studies in engineering science, working toward her ongoing goal of advancing AI safety and reliability in clinical workflows.

Chen graduated from MIT in 2024 with a BS in mathematics and computer science and an MEng in computer science. She worked on several projects involving machine learning for health care, and focused her master’s research on medical imaging in the Medical Vision Group of the Computer Science and Artificial Intelligence Laboratory (CSAIL).

Collaborating with IBM Research, Chen developed a neural framework for clinical-grade lumen segmentation in intravascular ultrasound and presented her findings at the MICCAI Machine Learning in Medical Imaging conference. Additionally, she worked at Cleanlab, an MIT-founded startup, creating an open-source library to ensure the integrity of image datasets used in vision tasks.

Chen was a teaching assistant in MIT’s departments of mathematics and of electrical engineering and computer science, and received a teaching excellence award. She taught high school students at the Hampshire College Summer Studies in Mathematics and was selected to participate in MISTI Global Teaching Labs in Italy.

Having studied the guzheng, a traditional Chinese instrument, since age 4, Chen served as president of the MIT Chinese Music Ensemble, explored Eastern and Western music synergies with the MIT Chamber Music Society, and performed at the United Nations. On campus, she was also active with the Asymptones a cappella group, the MIT Ring Committee, Ribotones, the Figure Skating Club, and the Undergraduate Association Innovation Committee.

Wilhem Hector

Wilhem Hector, a senior from Port-au-Prince, Haiti, majoring in mechanical engineering, was awarded a Global Rhodes Scholarship on Nov. 1. The first Haitian national to be named a Rhodes Scholar, Hector will pursue at Oxford a master’s in energy systems followed by a master’s in education, focusing on digital and social change. His long-term goals are twofold: pioneering Haiti’s renewable energy infrastructure and expanding hands-on learning opportunities in the country’s national curriculum.

Hector developed his passion for energy through his research in the MIT Howland Lab, where he investigated the uncertainty of wind power production during active yaw control. He also helped launch the MIT Renewable Energy Clinic through his work on the sources of opposition to energy projects in the U.S. Beyond his research, Hector made notable contributions as an intern at Radia Inc. and DTU Wind Energy Systems, where he helped develop computational wind farm modeling and simulation techniques.

Outside of MIT, he leads the Hector Foundation, a nonprofit providing educational opportunities to young people in Haiti. He has raised over $80,000 in the past five years to finance its initiatives, including the construction of Project Manus, Haiti’s first open-use engineering makerspace. Hector’s service endeavors have been supported by the MIT PKG Center, which awarded him the Davis Peace Prize, the PKG Fellowship for Social Impact, and the PKG Award for Public Service.

Hector co-chairs both the Student Events Board and the Class of 2025 Senior Ball Committee and has served as the social chair for Chocolate City and the African Students Association.

Anushka Nair

Anushka Nair, from Portland, Oregon, will graduate next spring with BS and MEng degrees in computer science and engineering with concentrations in economics and AI. She plans to pursue a DPhil in social data science at the Oxford Internet Institute. Nair aims to develop ethical AI technologies that address pressing societal challenges, beginning with combating misinformation.

For her master’s thesis under Professor David Rand, Nair is developing LLM-powered fact-checking tools to detect nuanced misinformation beyond human or automated capabilities. She also researches human-AI co-reasoning at the MIT Center for Collective Intelligence with Professor Thomas Malone. Previously, she conducted research on autonomous vehicle navigation at Stanford’s AI and Robotics Lab, energy microgrid load balancing at MIT’s Institute for Data, Systems, and Society, and worked with Professor Esther Duflo in economics.

Nair interned in the Executive Office of the Secretary-General at the United Nations, where she integrated technology solutions and assisted with launching the High-Level Advisory Body on AI. She also interned in Tesla’s energy division, contributing to Autobidder, an energy trading tool, and led the launch of a platform for monitoring distributed energy resources and renewable power plants. Her work has earned her recognition as a Social and Ethical Responsibilities of Computing Scholar and a U.S. Presidential Scholar.

Nair has served as president of the MIT Society of Women Engineers and of MIT and Harvard Women in AI, spearheading outreach programs to mentor young women in STEM fields. She also served as president of the MIT honor societies Eta Kappa Nu and Tau Beta Pi.

David Oluigbo

David Oluigbo, from Washington, is a senior majoring in artificial intelligence and decision making and minoring in brain and cognitive sciences. At Oxford, he will undertake an MSc in applied digital health followed by an MSc in modeling for global health. Afterward, Oluigbo plans to attend medical school with the goal of becoming a physician-scientist who researches and applies AI to address medical challenges in low-income countries.

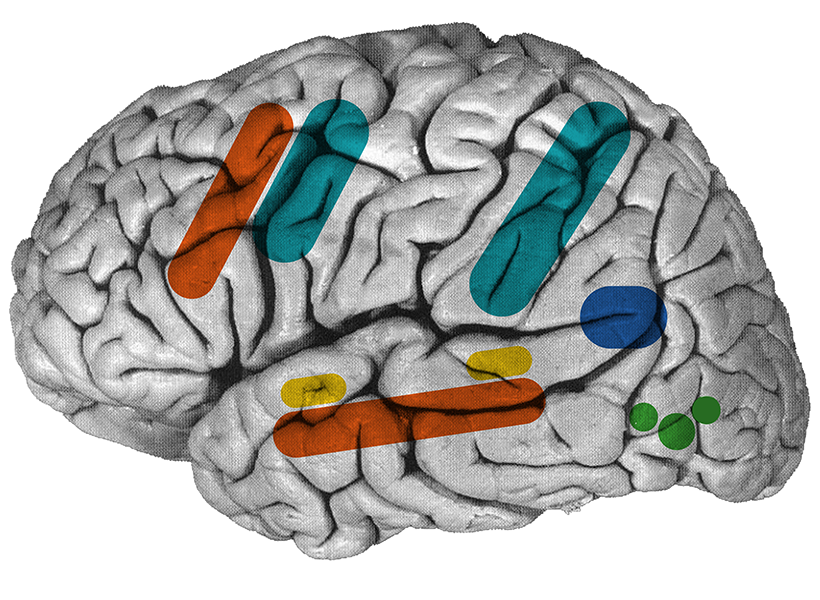

Since his first year at MIT, Oluigbo has conducted neural and brain research with Ev Fedorenko at the McGovern Institute for Brain Research and with Susanna Mierau’s Synapse and Network Development Group at Brigham and Women’s Hospital. His work with Mierau led to several publications and a poster presentation at the Federation of European Societies annual meeting.

In a summer internship at the National Institutes of Health Clinical Center, Oluigbo designed and trained machine-learning models on CT scans for automatic detection of neuroendocrine tumors, leading to first authorship on an International Society for Optics and Photonics conference proceedings paper, which he presented at the 2024 annual meeting. Oluigbo also did a summer internship with the Anyscale Learning for All Laboratory at the MIT Computer Science and Artificial Intelligence Laboratory.

Oluigbo is an EMT and systems administrator officer with MIT-EMS. He is a consultant for Code for Good, a representative on the MIT Schwarzman College of Computing Undergraduate Advisory Group, and holds executive roles with the Undergraduate Association, the MIT Brain and Cognitive Society, and the MIT Running Club.