Within a single cell, thousands of molecules, such as proteins, ions, and other signaling molecules, work together to perform all kinds of functions — absorbing nutrients, storing memories, and differentiating into specific tissues, among many others.

Deciphering these molecules, and all of their interactions, is a monumental task. Over the past 20 years, scientists have developed fluorescent reporters they can use to read out the dynamics of individual molecules within cells. However, typically only one or two such signals can be observed at a time, because a microscope cannot distinguish between many fluorescent colors.

MIT researchers have now developed a way to image up to five different molecule types at a time, by measuring each signal from random, distinct locations throughout a cell.

This approach could allow scientists to learn much more about the complex signaling networks that control most cell functions, says Edward Boyden, the Y. Eva Tan Professor in Neurotechnology and a professor of biological engineering, media arts and sciences, and brain and cognitive sciences at MIT.

“There are thousands of molecules encoded by the genome, and they’re interacting in ways that we don’t understand. Only by watching them at the same time can we understand their relationships,” says Boyden, who is also a member of MIT’s McGovern Institute for Brain Research and Koch Institute for Integrative Cancer Research.

In a new study, Boyden and his colleagues used this technique to identify two populations of neurons that respond to calcium signals in different ways, which may influence how they encode long-term memories, the researchers say.

Boyden is the senior author of the study, which appears today in Cell. The paper’s lead authors are MIT postdoc Changyang Linghu and graduate student Shannon Johnson.

Fluorescent clusters

To make molecular activity visible within a cell, scientists typically create reporters by fusing a protein that senses a target molecule to a protein that glows. “This is similar to how a smoke detector will sense smoke and then flash a light,” says Johnson, who is also a fellow in the Yang-Tan Center for Molecular Therapeutics. The most commonly used glowing protein is green fluorescent protein (GFP), which is based on a molecule originally found in a fluorescent jellyfish.

“Typically a biologist can see one or two colors at the same time on a microscope, and many of the reporters out there are green, because they’re based on the green fluorescent protein,” Boyden says. “What has been lacking until now is the ability to see more than a couple of these signals at once.”

“Just like listening to the sound of a single instrument from an orchestra is far from enough to fully appreciate a symphony,” Linghu says, “by enabling observations of multiple cellular signals at the same time, our technology will help us understand the ‘symphony’ of cellular activities.”

To boost the number of signals they could see, the researchers set out to identify signals by location instead of by color. They modified existing reporters by adding two small peptides to each one, which cause the reporters to accumulate in distinct clusters at different locations within a cell.

“It’s like having reporter X be tethered to a LEGO brick, and reporter Z tethered to a K’NEX piece — only LEGO bricks will snap to other LEGO bricks, causing only reporter X to be clustered with more of reporter X,” Johnson says.

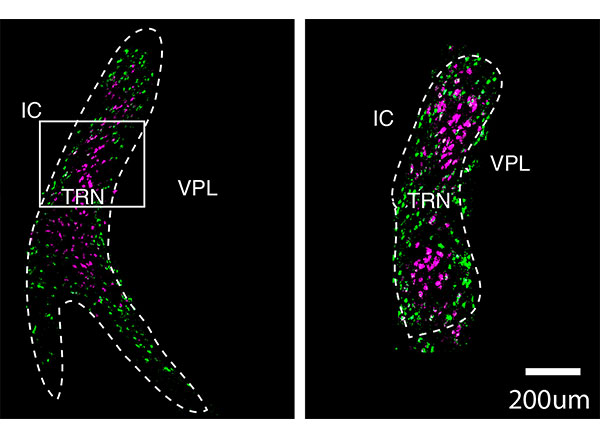

With this technique, each cell ends up with hundreds of clusters of fluorescent reporters. After measuring the activity of each cluster under a microscope, based on the changing fluorescence, the researchers can identify which molecule was being measured in each cluster by preserving the cell and staining for peptide tags that are unique to each reporter. The peptide tags are invisible in the live cell, but they can be stained and seen after the live imaging is done. This allows the researchers to distinguish signals for different molecules even though they may all be fluorescing the same color in the live cell.
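The two-step readout described above (record each cluster's fluorescence live, then assign it to a reporter from the post-fixation stain) can be illustrated with a small sketch. This is not the researchers' analysis code. The tag names, the `min_ratio` cutoff, the data layout, and every number below are assumptions made up for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical mapping from peptide tag to the reporter it marks (illustrative names only).
TAG_TO_REPORTER = {"tag_A": "calcium", "tag_B": "cAMP", "tag_C": "PKA"}


def identify_reporter(stain_intensity, min_ratio=2.0):
    """Return the reporter whose tag stains brightest, or None if ambiguous.

    min_ratio is an assumed cutoff: the brightest tag must exceed the
    runner-up by at least this factor for the cluster to be assigned.
    """
    ranked = sorted(stain_intensity.items(), key=lambda kv: kv[1], reverse=True)
    (best_tag, best), (_, second) = ranked[0], ranked[1]
    if second > 0 and best / second < min_ratio:
        return None
    return TAG_TO_REPORTER[best_tag]


def demultiplex(clusters):
    """Group live-imaging traces by reporter and average them per time point."""
    grouped = defaultdict(list)
    for cluster in clusters:
        reporter = identify_reporter(cluster["stain"])
        if reporter is not None:
            grouped[reporter].append(cluster["trace"])
    # zip(*traces) walks the traces time point by time point.
    return {r: [mean(vals) for vals in zip(*traces)] for r, traces in grouped.items()}


# Synthetic values for illustration only; "trace" is the live fluorescence of one
# cluster over time, "stain" is its post-fixation intensity for each peptide tag.
clusters = [
    {"trace": [1.0, 2.0, 1.5], "stain": {"tag_A": 9.0, "tag_B": 1.0, "tag_C": 0.5}},
    {"trace": [1.0, 4.0, 2.5], "stain": {"tag_A": 8.0, "tag_B": 0.5, "tag_C": 1.0}},
    {"trace": [1.0, 1.0, 3.0], "stain": {"tag_A": 0.5, "tag_B": 7.0, "tag_C": 1.0}},
    {"trace": [1.0, 1.0, 1.0], "stain": {"tag_A": 3.0, "tag_B": 2.5, "tag_C": 0.2}},  # ambiguous, skipped
]

signals = demultiplex(clusters)
print(signals)
```

In this toy version, all clusters glow the same color during live imaging, and only the stain step reveals which trace belongs to which molecule. Clusters whose stain is ambiguous are dropped rather than guessed.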

Using this approach, the researchers showed that they could see five different molecular signals in a single cell. To demonstrate the potential usefulness of this strategy, they measured the activities of three molecules in parallel — calcium, cyclic AMP, and protein kinase A (PKA). These molecules form a signaling network that is involved with many different cellular functions throughout the body. In neurons, it plays an important role in translating a short-term input (from upstream neurons) into long-term changes such as strengthening the connections between neurons — a process that is necessary for learning and forming new memories.

Applying this imaging technique to pyramidal neurons in the hippocampus, the researchers identified two novel subpopulations with different calcium signaling dynamics. One population showed slow calcium responses. Neurons in the other population had faster calcium responses and also larger PKA responses. The researchers believe this heightened PKA response may help sustain long-lasting changes in the neurons.

Imaging signaling networks

The researchers now plan to try this approach in living animals so they can study how signaling network activities relate to behavior, and also to expand it to other types of cells, such as immune cells. This technique could also be useful for comparing signaling network patterns between cells from healthy and diseased tissue.

In this paper, the researchers showed they could record five different molecular signals at once, and they believe that by modifying their existing strategy, they could record up to 16. With additional work, that number could reach into the hundreds, they say.

“That really might help crack open some of these tough questions about how the parts of a cell work together,” Boyden says. “One might imagine an era when we can watch everything going on in a living cell, or at least the part involved with learning, or with disease, or with the treatment of a disease.”

The research was funded by the Friends of the McGovern Institute Fellowship; the J. Douglas Tan Fellowship; Lisa Yang; the Yang-Tan Center for Molecular Therapeutics; John Doerr; the Open Philanthropy Project; the HHMI-Simons Faculty Scholars Program; the Human Frontier Science Program; the U.S. Army Research Laboratory; the MIT Media Lab; the Picower Institute Innovation Fund; the National Institutes of Health, including an NIH Director’s Pioneer Award; and the National Science Foundation.