The human brain is very good at solving complicated problems. One reason is that humans can break problems apart into manageable subtasks that are easy to solve one at a time.

This allows us to complete a daily task like going out for coffee by breaking it into steps: getting out of our office building, navigating to the coffee shop, and once there, obtaining the coffee. This strategy also makes it easy to handle obstacles. For example, if the elevator is broken, we can revise how we get out of the building without changing the other steps.

While there is a great deal of behavioral evidence demonstrating humans’ skill at these complicated tasks, it has been difficult to devise experimental scenarios that allow precise characterization of the computational strategies we use to solve problems.

In a new study, MIT researchers have successfully modeled how people deploy different decision-making strategies to solve a complicated task — in this case, predicting how a ball will travel through a maze when the ball is hidden from view. The human brain cannot perform this task perfectly because it is impossible to track all of the possible trajectories in parallel, but the researchers found that people can perform reasonably well by flexibly adopting two strategies known as hierarchical reasoning and counterfactual reasoning.

The researchers were also able to determine the circumstances under which people choose each of those strategies.

“What humans are capable of doing is to break down the maze into subsections, and then solve each step using relatively simple algorithms. Effectively, when we don’t have the means to solve a complex problem, we manage by using simpler heuristics that get the job done,” says Mehrdad Jazayeri, a professor of brain and cognitive sciences, a member of MIT’s McGovern Institute for Brain Research, an investigator at the Howard Hughes Medical Institute, and the senior author of the study.

Mahdi Ramadan PhD ’24 and graduate student Cheng Tang are the lead authors of the paper, which appears today in Nature Human Behaviour. Nicholas Watters PhD ’25 is also a co-author.

Rational strategies

When humans perform simple tasks that have a clear correct answer, such as categorizing objects, they perform extremely well. When tasks become more complex, such as planning a trip to your favorite cafe, there may no longer be one clearly superior answer. And, at each step, there are many things that could go wrong. In these cases, humans are very good at working out a solution that will get the task done, even though it may not be the optimal solution.

Those solutions often involve problem-solving shortcuts, or heuristics. Two prominent heuristics humans commonly rely on are hierarchical and counterfactual reasoning. Hierarchical reasoning is the process of breaking down a problem into layers, starting from the general and proceeding toward specifics. Counterfactual reasoning involves imagining what would have happened if you had made a different choice. While these strategies are well-known, scientists don’t know much about how the brain decides which one to use in a given situation.

“This is really a big question in cognitive science: How do we problem-solve in a suboptimal way, by coming up with clever heuristics that we chain together in a way that ends up getting us closer and closer until we solve the problem?” Jazayeri says.

To address this question, Jazayeri and his colleagues devised a task that is just complex enough to require these strategies, yet simple enough that the outcomes and the calculations that go into them can be measured.

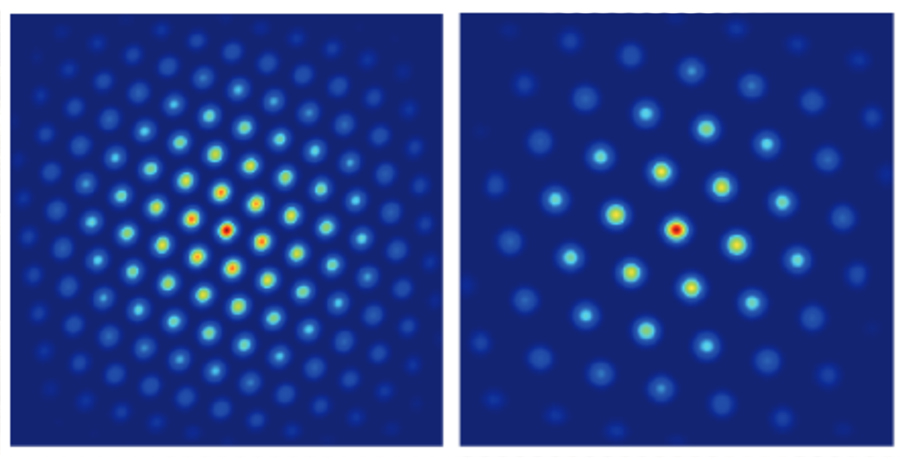

The task requires participants to predict the path of a ball as it moves through a maze along one of four possible trajectories. Once the ball enters the maze, people cannot see which path it travels. At each of the two junctions in the maze, they hear an auditory cue when the ball reaches it. Humans cannot solve this task with perfect accuracy.

“It requires four parallel simulations in your mind, and no human can do that. It’s analogous to having four conversations at a time,” Jazayeri says. “The task allows us to tap into this set of algorithms that the humans use, because you just can’t solve it optimally.”

The researchers recruited about 150 human volunteers to participate in the study. Before each subject began the ball-tracking task, the researchers evaluated how accurately they could estimate timespans of several hundred milliseconds, about the length of time it takes the ball to travel along one arm of the maze.

For each participant, the researchers built computational models that predicted the pattern of errors that participant would make, given their timing skill, under several strategies: running parallel simulations, using hierarchical reasoning alone, using counterfactual reasoning alone, or combining the two.

The researchers compared the subjects’ performance with the models’ predictions and found that every subject’s performance most closely matched a model that used hierarchical reasoning but sometimes switched to counterfactual reasoning.

That suggests that instead of tracking all the possible paths the ball could take, people broke up the task. First, they picked the direction (left or right) in which they thought the ball turned at the first junction, and continued to track the ball as it headed for the next turn. If the timing of the next sound they heard wasn’t compatible with the path they had chosen, they would go back and revise their first prediction, but only some of the time.

Switching back to the other side, which represents a shift to counterfactual reasoning, requires people to review their memory of the tones that they heard. However, it turns out that these memories are not always reliable, and the researchers found that people decided whether to go back or not based on how good they believed their memory to be.
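To make this strategy concrete, here is a toy Python sketch of a single agent deciding which way the ball turned at the first junction. It is not the researchers' model: the arm durations in `ARM_DURATION`, the noise parameters, the `recall_confidence` gate with its `switch_threshold`, and all function names are hypothetical, and the sketch ignores the second junction entirely. The agent commits to one side (hierarchical reasoning), checks whether the timing of the second tone fits that side, and goes back to reconsider (counterfactual reasoning) only when it believes its memory of the tones is reliable.

```python
import random
from dataclasses import dataclass

SIDES = ("L", "R")
# Hypothetical times (s) for the ball to travel from the first junction to
# the second along each arm. Illustrative values only, not from the study.
ARM_DURATION = {"L": 0.4, "R": 0.7}


def nearest_side(interval):
    """Return the arm whose expected duration is closest to an interval."""
    return min(SIDES, key=lambda side: abs(interval - ARM_DURATION[side]))


@dataclass
class Agent:
    timing_noise: float       # assumed Weber fraction for interval estimates
    recall_noise: float       # assumed extra noise when recalling a past interval
    recall_confidence: float  # agent's belief (0 to 1) that its memory is reliable
    switch_threshold: float = 0.5  # hypothetical confidence needed to go back


def infer_first_turn(agent, true_turn, rng):
    # Hierarchical step: commit to one side and track only that branch.
    guess = rng.choice(SIDES)
    # Perceived time between the two tones, with scalar timing noise.
    true_interval = ARM_DURATION[true_turn]
    observed = true_interval * (1 + rng.gauss(0, agent.timing_noise))
    # Compatible if the second tone fits the committed arm better than the other.
    if nearest_side(observed) == guess:
        return guess
    # Counterfactual step: revisit the first junction only if memory is trusted.
    if agent.recall_confidence < agent.switch_threshold:
        return guess
    # Re-evaluate using a noisier recalled copy of the interval.
    recalled = observed * (1 + rng.gauss(0, agent.recall_noise))
    return nearest_side(recalled)


def accuracy(agent, n_trials=5000, seed=0):
    """Fraction of simulated trials in which the first turn is reported correctly."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(n_trials):
        true_turn = rng.choice(SIDES)
        correct += infer_first_turn(agent, true_turn, rng) == true_turn
    return correct / n_trials


if __name__ == "__main__":
    trusting = Agent(timing_noise=0.15, recall_noise=0.3, recall_confidence=0.8)
    wary = Agent(timing_noise=0.15, recall_noise=0.3, recall_confidence=0.2)
    print(accuracy(trusting), accuracy(wary))
```

Setting `recall_confidence` below `switch_threshold` yields a purely hierarchical agent that never revisits its first guess, so its first-turn accuracy depends only on whether that initial commitment happened to be right.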

“People rely on counterfactuals to the degree that it’s helpful,” Jazayeri says. “People who take a big performance loss when they do counterfactuals avoid doing them. But if you are someone who’s really good at retrieving information from the recent past, you may go back to the other side.”

Human limitations

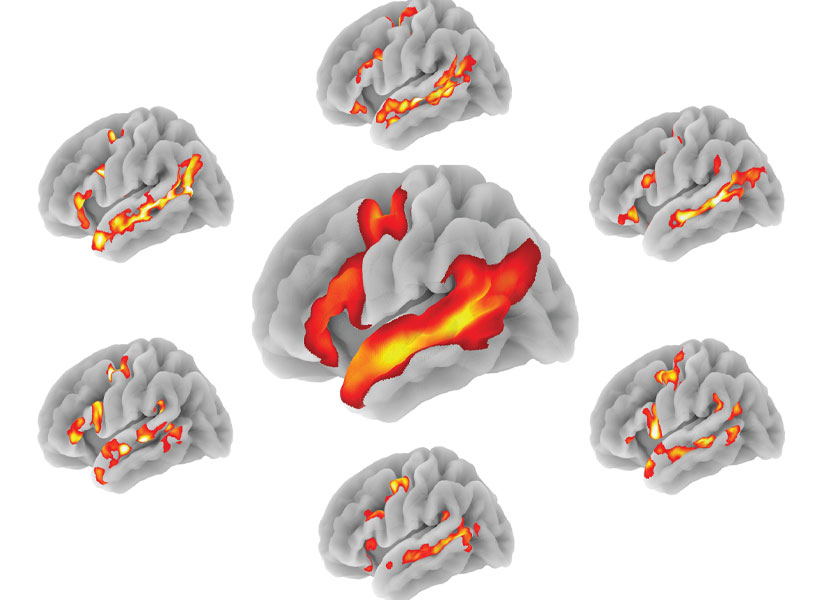

To further validate their results, the researchers created a machine-learning neural network and trained it to complete the task. A machine-learning model trained on this task will track the ball’s path accurately and make the correct prediction every time, unless the researchers impose limitations on its performance.

When the researchers added cognitive limitations similar to those faced by humans, they found that the model altered its strategies. When they eliminated the model’s ability to follow all possible trajectories, it began to employ hierarchical and counterfactual strategies like humans do. If the researchers reduced the model’s memory recall ability, it began to switch to counterfactual reasoning only if it thought its recall would be good enough to get the right answer, just as humans do.

“What we found is that networks mimic human behavior when we impose on them those computational constraints that we found in human behavior,” Jazayeri says. “This is really saying that humans are acting rationally under the constraints that they have to function under.”

By slightly varying the amount of memory impairment programmed into the models, the researchers also saw hints that the switching of strategies appears to happen gradually, rather than at a distinct cut-off point. They are now performing further studies to try to determine what is happening in the brain as these shifts in strategy occur.

The research was funded by a Lisa K. Yang ICoN Fellowship, a Friends of the McGovern Institute Student Fellowship, a National Science Foundation Graduate Research Fellowship, the Simons Foundation, the Howard Hughes Medical Institute, and the McGovern Institute.