Hearing through the clatter

A new study explains how the brain extracts meaningful sound from noise.

In a busy coffee shop, our eardrums are inundated with sound waves – people chatting, the clatter of cups, music playing – yet our brains somehow manage to untangle relevant sounds, like a barista announcing that our “coffee is ready,” from insignificant noise. A new McGovern Institute study sheds light on how the brain accomplishes the task of extracting meaningful sounds from background noise – findings that could one day help to build artificial hearing systems and aid development of targeted hearing prosthetics.

“These findings reveal a neural correlate of our ability to listen in noise, and at the same time demonstrate functional differentiation between different stages of auditory processing in the cortex,” explains Josh McDermott, an associate professor of brain and cognitive sciences at MIT, a member of the McGovern Institute and the Center for Brains, Minds and Machines, and the senior author of the study.

The auditory cortex, a part of the brain that responds to sound, has long been known to have distinct anatomical subregions, but the role these areas play in auditory processing has remained a mystery. In their study published today in Nature Communications, McDermott and former graduate student Alex Kell discovered that these subregions respond differently to the presence of background noise, suggesting that auditory processing occurs in steps that progressively home in on and isolate a sound of interest.

Background check

Previous studies have shown that the primary and non-primary subregions of the auditory cortex respond to sound with different dynamics, but these studies were largely based on brain activity in response to speech or simple synthetic sounds (such as tones and clicks). Little was known about how these regions support everyday auditory behavior.

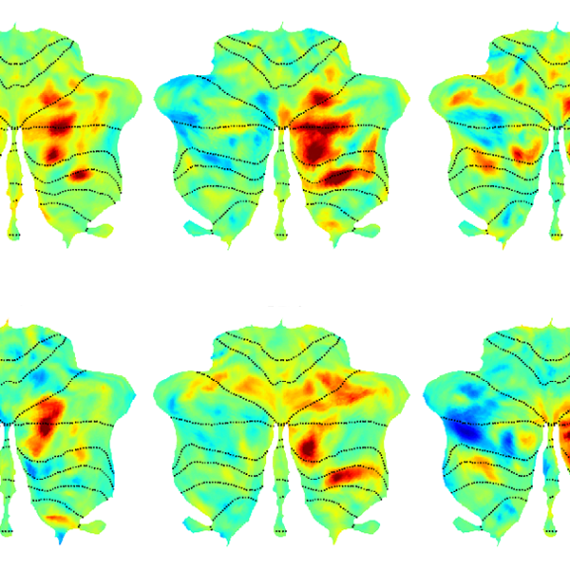

To test these subregions under more realistic conditions, McDermott and Kell, who is now a postdoctoral researcher at Columbia University, assessed changes in human brain activity while subjects listened to natural sounds with and without background noise.

While lying in an MRI scanner, subjects listened to 30 different natural sounds, ranging from meowing cats to ringing phones, that were presented alone or embedded in real-world background noise such as heavy rain.

“When I started studying audition,” explains Kell, “I started just sitting around in my day-to-day life, just listening, and was astonished at the constant background noise that seemed to usually be filtered out by default. Most of these noises tended to be pretty stable over time, suggesting we could experimentally separate them. The project flowed from there.”

To their surprise, Kell and McDermott found that the primary and non-primary regions of the auditory cortex responded differently to natural sound depending upon whether background noise was present.

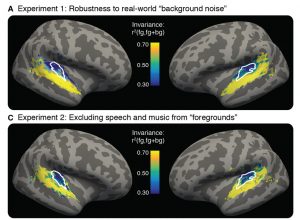

They found that activity in the primary auditory cortex was altered when background noise was present, suggesting that this region has not yet differentiated between meaningful sounds and background noise. Non-primary regions, however, responded similarly to natural sounds irrespective of whether noise was present, suggesting that cortical signals generated by sound are transformed or “cleaned up” to remove background noise by the time they reach the non-primary auditory cortex.
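One way to picture this comparison, offered as a minimal sketch rather than the study's actual analysis, is to correlate a region's responses to a set of sounds heard alone with its responses to the same sounds heard in noise. A correlation near 1 means noise barely changes the response pattern. The response values below are invented for illustration, and the function names `pearson` and `noise_robustness` are hypothetical.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def noise_robustness(clean, noisy):
    """Assumed index: how well responses to clean sounds predict responses in noise."""
    return pearson(clean, noisy)


# Invented responses of one region to five sounds presented alone.
clean = [1.0, 2.0, 3.0, 4.0, 5.0]

# Invented "non-primary-like" region: noise adds a constant offset, pattern preserved.
nonprimary_noisy = [1.1, 2.1, 3.1, 4.1, 5.1]

# Invented "primary-like" region: noise scrambles the response pattern.
primary_noisy = [3.0, 1.0, 4.0, 2.0, 5.0]

print(noise_robustness(clean, nonprimary_noisy))  # close to 1.0
print(noise_robustness(clean, primary_noisy))     # 0.5
```

With these made-up numbers, the region whose response pattern survives the added noise scores close to 1, while the region whose pattern is disrupted scores 0.5.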

“We were surprised by how big the difference was between primary and non-primary areas,” explains Kell, “so we ran a bunch more subjects but kept seeing the same thing. We had a ton of questions about what might be responsible for this difference, and that’s why we ended up running all these follow-up experiments.”

A general principle

Kell and McDermott went on to test whether these responses were specific to particular sounds, and found that the effect held regardless of the source or type of sound. Music, speech, and a squeaky toy all activated the non-primary regions similarly, whether or not background noise was present.

The authors also tested whether attention plays a role. Even when the researchers sneakily distracted subjects with a visual task in the scanner, the cortical subregions showed the same pattern of responses to meaningful sound and background noise, indicating that attention is not driving this aspect of sound processing. In other words, even when we are focused on reading a book, our brain is diligently sorting the sound of our meowing cat from the patter of heavy rain outside.

Future directions

The McDermott lab is now building computational models of the so-called “noise robustness” described in the Nature Communications study. Kell, meanwhile, is pursuing a finer-grained understanding of sound processing in his postdoctoral work at Columbia by exploring the neural circuit mechanisms underlying this phenomenon.

By gaining a deeper understanding of how the brain processes sound, the researchers hope their work will contribute to improved diagnosis and treatment of hearing dysfunction. Such research could help to reveal the origins of listening difficulties that accompany developmental disorders or age-related hearing loss. For instance, if hearing loss results from dysfunction in sensory processing, this could be caused by abnormal noise robustness in the auditory cortex. Normal noise robustness might instead suggest that there are impairments elsewhere in the brain, such as a breakdown in higher executive function.

“In the future,” McDermott says, “we hope these noninvasive measures of auditory function may become valuable tools for clinical assessment.”