The core components of CRISPR-based genome-editing therapies are bacterial proteins called nucleases that can stimulate unwanted immune responses in people, increasing the chances of side effects and making these therapies potentially less effective.

Researchers at the Broad Institute of MIT and Harvard and Cyrus Biotechnology have now engineered two CRISPR nucleases, Cas9 and Cas12, to mask them from the immune system. The team identified protein sequences on each nuclease that trigger the immune system and used computational modeling to design new versions that evade immune recognition. In mice, the engineered enzymes edited genes with similar efficiency to standard nucleases while provoking reduced immune responses.

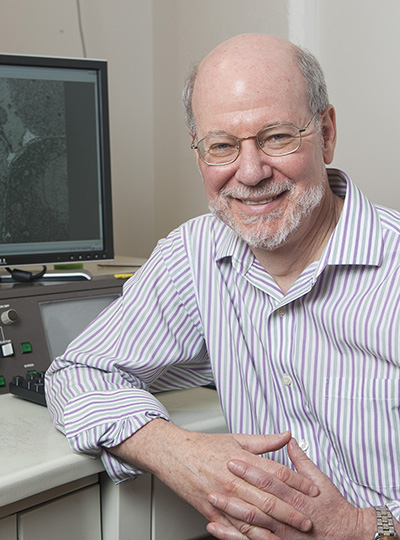

Appearing today in Nature Communications, the findings could help pave the way for safer, more efficient gene therapies. The study was led by Feng Zhang, a core institute member at the Broad and an Investigator at the McGovern Institute for Brain Research at MIT.

“As CRISPR therapies enter the clinic, there is a growing need to ensure that these tools are as safe as possible, and this work tackles one aspect of that challenge,” said Zhang, who is also a co-director of the K. Lisa Yang and Hock E. Tan Center for Molecular Therapeutics, the James and Patricia Poitras Professor of Neuroscience, and a professor at MIT. He is an Investigator at the Howard Hughes Medical Institute.

Rumya Raghavan, a graduate student in Zhang’s lab when the study began, and Mirco Julian Friedrich, a postdoctoral scholar in Zhang’s lab, were co-first authors on the study.

“People have known for a while that Cas9 causes an immune response, but we wanted to pinpoint which parts of the protein were being recognized by the immune system and then engineer the proteins to get rid of those parts while retaining its function,” said Raghavan.

“Our goal was to use this information to create not only a safer therapy, but one that is potentially even more effective because it is not being eliminated by the immune system before it can do its job,” added Friedrich.

In search of immune triggers

Many CRISPR-based therapies use nucleases derived from bacteria. About 80 percent of people have pre-existing immunity to these proteins through everyday exposure to these bacteria, but scientists didn’t know which parts of the nucleases the immune system recognized.

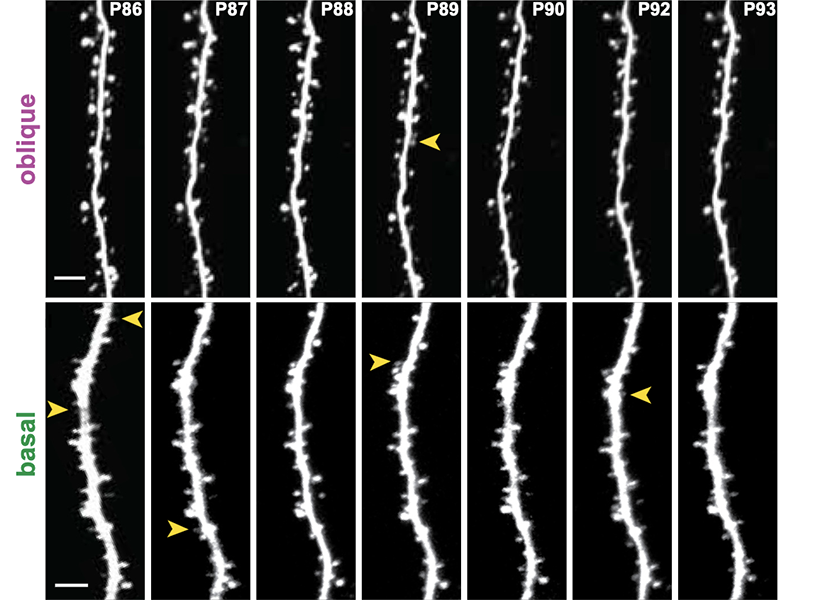

To find out, Zhang’s team used a specialized type of mass spectrometry to identify and analyze the Cas9 and Cas12 protein fragments recognized by immune cells. For each of the two nucleases (Cas9 from Streptococcus pyogenes and Cas12 from Staphylococcus aureus), they identified three short sequences, each about eight amino acids long, that evoked an immune response. They then partnered with Cyrus Biotechnology, a company co-founded by University of Washington biochemist David Baker that develops structure-based computational tools to design proteins that evade the immune response. After Zhang’s team identified immunogenic sequences in Cas9 and Cas12, Cyrus used these computational approaches to design versions of the nucleases that did not include the immune-triggering sequences.

Zhang’s lab used prediction software to validate that the new nucleases were less likely to trigger immune responses. Next, the team engineered a panel of new nucleases informed by these predictions and tested the most promising candidates in human cells and in mice that were genetically modified to bear key components of the human immune system. In both cases, they found that the engineered enzymes provoked significantly weaker immune responses than the original nucleases but still cut DNA with the same efficiency.

Minimally immunogenic nucleases are just one part of safer gene therapies, Zhang’s team says. In the future, they hope their methods may also help scientists design delivery vehicles to evade the immune system.

This study was funded in part by the Poitras Center for Psychiatric Disorders Research, the K. Lisa Yang and Hock E. Tan Center for Molecular Therapeutics in Neuroscience, and the Hock E. Tan and K. Lisa Yang Center for Autism Research at MIT.