James DiCarlo, the Peter de Florez Professor of Neuroscience, has been appointed director of the MIT Quest for Intelligence. MIT Quest was launched in 2018 to discover the basis of natural intelligence, create new foundations for machine intelligence, and deliver new tools and technologies for humanity.

As director, DiCarlo will forge new collaborations with researchers within MIT and beyond to accelerate progress in understanding intelligence and developing the next generation of intelligence tools.

“We have discovered and developed surprising new connections between natural and artificial intelligence,” says DiCarlo, currently head of the Department of Brain and Cognitive Sciences (BCS). “The scientific understanding of natural intelligence, and advances in building artificial intelligence with positive real-world impact, are interlocked aspects of a unified, collaborative grand challenge, and MIT must continue to lead the way.”

Aude Oliva, senior research scientist at the Computer Science and Artificial Intelligence Laboratory (CSAIL) and the MIT director of the MIT-IBM Watson AI Lab, will lead industry engagements as director of MIT Quest Corporate. Nicholas Roy, professor of aeronautics and astronautics and a member of CSAIL, will lead the development of systems to deliver on the mission as director of MIT Quest Systems Engineering. Daniel Huttenlocher, dean of the MIT Schwarzman College of Computing, will serve as chair of MIT Quest.

“The MIT Quest’s leadership team has positioned this initiative to spearhead our understanding of natural and artificial intelligence, and I am delighted that Jim is taking on this role,” says Huttenlocher, the Henry Ellis Warren (1894) Professor of Electrical Engineering and Computer Science.

DiCarlo will step down from his current role as head of BCS, a position he has held for nearly nine years, and will continue as faculty in BCS and as an investigator in the McGovern Institute for Brain Research.

“Jim has been a highly productive leader for his department, the School of Science, and the Institute at large. I’m excited to see the impact he will make in this new role,” says Nergis Mavalvala, dean of the School of Science and the Curtis and Kathleen Marble Professor of Astrophysics.

As department head, DiCarlo oversaw significant progress in the department’s scientific and educational endeavors. Roughly a quarter of current BCS faculty were hired on his watch, strengthening the department’s foundations in cognitive, systems, and cellular and molecular brain science. In addition, DiCarlo developed a new departmental emphasis in computation, deepening BCS’s ties with the MIT Schwarzman College of Computing and other MIT units such as the Center for Brains, Minds and Machines. He also developed and leads an NIH-funded graduate training program in computationally enabled integrative neuroscience. As a result, BCS is one of the few departments in the world attempting to decipher, in engineering terms, how the human mind emerges from the biological components of the brain.

To prepare students for this future, DiCarlo collaborated with BCS Associate Department Head Michale Fee to design and execute a total overhaul of the Course 9 curriculum. In addition, partnering with the Department of Electrical Engineering and Computer Science, BCS developed a new major, Course 6-9 (Computation and Cognition), to meet the rapidly growing interest in this interdisciplinary topic. In only its second year, Course 6-9 already has more than 100 undergraduate majors.

DiCarlo has also worked tirelessly to build a more open, connected, and supportive culture across the entire BCS community in Building 46. In this work, as in everything, DiCarlo sought to bring people together to address challenges collaboratively. He attributes progress to strong partnerships with Li-Huei Tsai, the Picower Professor of Neuroscience in BCS and director of the Picower Institute for Learning and Memory, and with Robert Desimone, the Doris and Don Berkey Professor in BCS and director of the McGovern Institute for Brain Research, as well as to the work of dozens of faculty and staff. For example, in collaboration with associate department head Professor Rebecca Saxe, the department has focused on faculty mentorship of graduate students, and, in collaboration with postdoc officer Professor Mark Bear, it has developed postdoc salary and benefit standards. Both initiatives have become models for the Institute. In recent months, DiCarlo partnered with new associate department head Professor Laura Schulz to focus renewed energy and resources on initiatives to address systemic racism and promote diversity, equity, inclusion, and social justice.

“Looking ahead, I share Jim’s vision for the research and educational programs of the department, and for enhancing its cohesiveness as a community, especially with regard to issues of diversity, equity, inclusion, and justice,” says Mavalvala. “I am deeply committed to supporting his successor in furthering these goals while maintaining the great intellectual strength of BCS.”

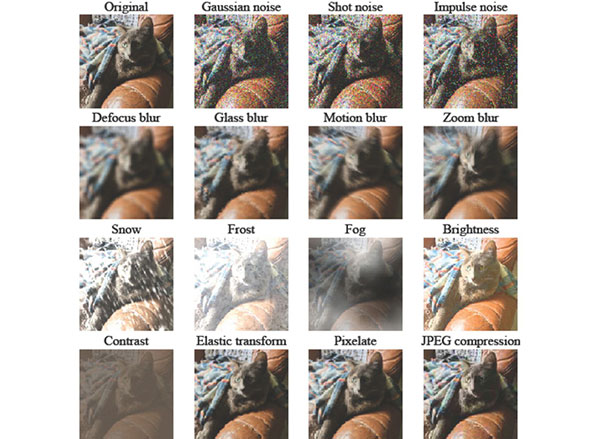

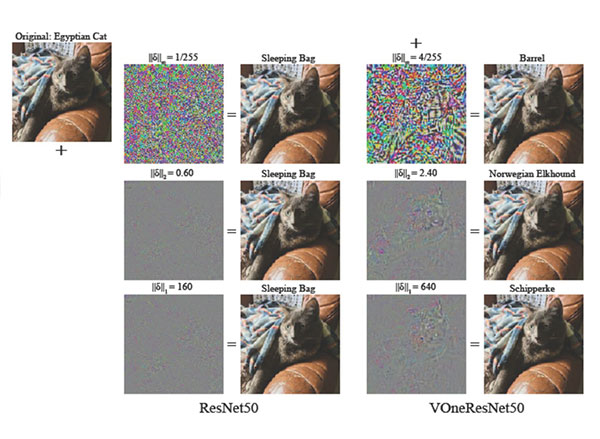

In his own research, DiCarlo uses a combination of large-scale neurophysiology, brain imaging, optogenetic methods, and high-throughput computational simulations to understand the neuronal mechanisms and cortical computations that underlie human visual intelligence. Working in animal models, he and his research collaborators have established precise connections between the internal workings of the visual system and the internal workings of particular computer vision systems. And they have demonstrated that these science-to-engineering connections lead to new ways to modulate neurons deep in the brain as well as to improved machine vision systems. His lab’s goals are to help develop more human-like machine vision, new neural prosthetics to restore or augment lost senses, new learning strategies, and an understanding of how visual cognition is impaired in agnosia, autism, and dyslexia.

DiCarlo earned both a PhD in biomedical engineering and an MD from The Johns Hopkins University in 1998, and completed his postdoctoral training in primate visual neurophysiology at Baylor College of Medicine. He joined the MIT faculty in 2002.

A search committee will convene early this year to recommend candidates for the next department head of BCS. DiCarlo will continue to lead the department until that new head is selected.