Many children struggle to learn to read, and studies have shown that students from a lower socioeconomic status (SES) background are more likely to have difficulty than those from a higher SES background.

MIT neuroscientists have now discovered that the types of difficulties that lower-SES students have with reading, and the underlying brain signatures, are, on average, different from those of higher-SES students who struggle with reading.

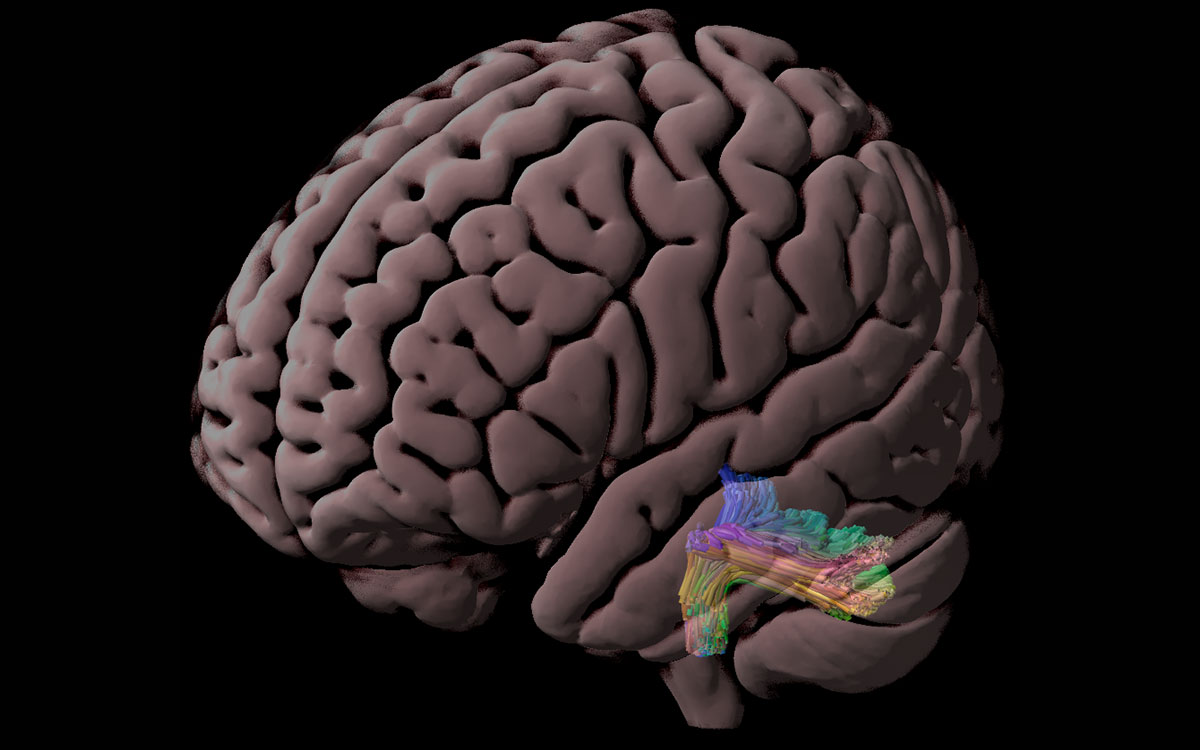

In a new study, which included brain scans of more than 150 children as they performed tasks related to reading, researchers found that when students from higher SES backgrounds struggled with reading, it could usually be explained by differences in their ability to piece sounds together into words, a skill known as phonological processing.

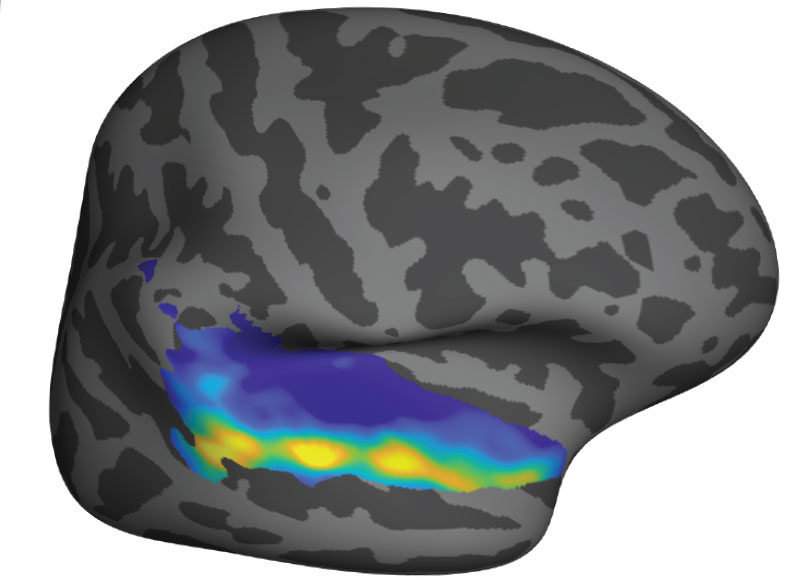

However, when students from lower SES backgrounds struggled, it was best explained by differences in their ability to rapidly name words or letters, a task associated with orthographic processing, or visual interpretation of words and letters. Patterns of brain activation measured during phonological and orthographic processing further confirmed this distinction.

These differences suggest that different types of interventions may be needed for different groups of children, the researchers say. The study also highlights the importance of including a wide range of SES levels in studies of reading or other types of academic learning.

“Within the neuroscience realm, we tend to rely on convenience samples of participants, so a lot of our understanding of the neuroscience components of reading in general, and reading disabilities in particular, tends to be based on higher-SES families,” says Rachel Romeo, a former graduate student in the Harvard-MIT Program in Health Sciences and Technology and the lead author of the study. “If we only look at these nonrepresentative samples, we can come away with a relatively biased view of how the brain works.”

Romeo is now an assistant professor in the Department of Human Development and Quantitative Methodology at the University of Maryland. John Gabrieli, the Grover Hermann Professor of Health Sciences and Technology and a professor of brain and cognitive sciences at MIT, is the senior author of the paper, which appears today in the journal Developmental Cognitive Neuroscience.

Components of reading

For many years, researchers have known that children’s scores on standardized assessments of reading are correlated with socioeconomic factors such as school spending per student or the number of children at the school who qualify for free or reduced-price lunches.

Studies of children who struggle with reading, mostly done in higher-SES environments, have shown that the aspect of reading they struggle with most is phonological awareness: the understanding of how sounds combine to make a word, and how sounds can be split up and swapped in or out to make new words.

“That’s a key component of reading, and difficulty with phonological processing is often one of the hallmarks of dyslexia or other reading disorders,” Romeo says.

In the new study, the MIT team wanted to explore how SES might affect phonological processing as well as another key aspect of reading, orthographic processing. This relates more to the visual components of reading, including the ability to identify letters and read words.

To do the study, the researchers recruited first- and second-grade students from the Boston area, making an effort to include a range of SES levels. For the purposes of this study, SES was assessed by parents' total years of formal education, a commonly used measure of a family's SES.

“We went into this not necessarily with any hypothesis about how SES might relate to the two types of processing, but just trying to understand whether SES might be impacting one or the other more, or if it affects both types the same,” Romeo says.

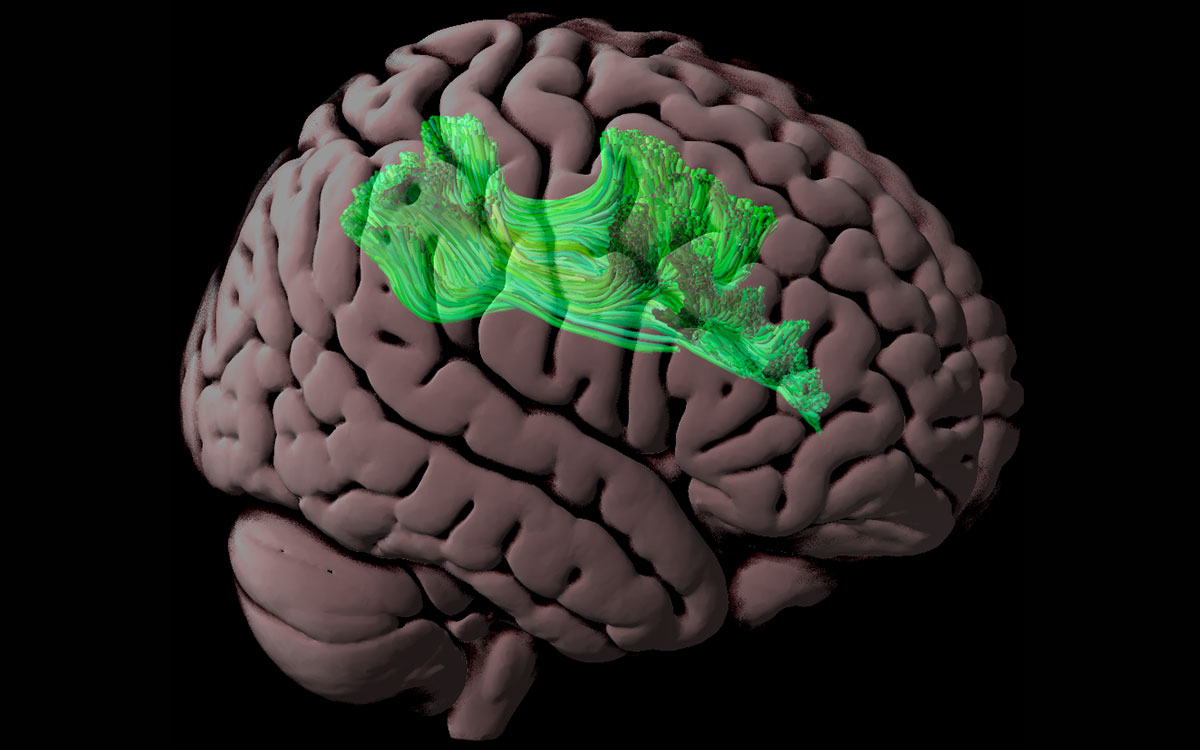

The researchers first gave each child a series of standardized tests designed to measure either phonological processing or orthographic processing. Then, they performed fMRI scans of each child while they carried out additional phonological or orthographic tasks.

The initial series of tests allowed the researchers to determine each child’s abilities for both types of processing, and the brain scans allowed them to measure brain activity in parts of the brain linked with each type of processing.

The results showed that at the higher end of the SES spectrum, differences in phonological processing ability accounted for most of the differences between good readers and struggling readers. This is consistent with the findings of previous studies of reading difficulty. In those children, the researchers also found greater differences in activity in the parts of the brain responsible for phonological processing.

However, the outcomes were different when the researchers analyzed the lower end of the SES spectrum. There, the researchers found that variance in orthographic processing ability accounted for most of the differences between good readers and struggling readers. fMRI scans of these children revealed greater differences in activity in parts of the brain that are involved in orthographic processing.

Optimizing interventions

There are many possible reasons why a lower SES background might lead to difficulties in orthographic processing, the researchers say. It might be less exposure to books at home, or limited access to libraries and other resources that promote literacy. For children from this background who struggle with reading, different types of interventions might benefit them more than the ones typically used for children who have difficulty with phonological processing.

In a 2017 study, Gabrieli, Romeo, and others found that a summer reading intervention that focused on helping students develop the sensory and cognitive processing necessary for reading was more beneficial for students from lower-SES backgrounds than for children from higher-SES backgrounds. Those findings also support the idea that tailored interventions may be necessary for individual students, they say.

“There are two major reasons we understand that cause children to struggle as they learn to read in these early grades. One of them is learning differences, most prominently dyslexia, and the other one is socioeconomic disadvantage,” Gabrieli says. “In my mind, schools have to help all these kinds of kids become the best readers they can, so recognizing the source or sources of reading difficulty ought to inform practices and policies that are sensitive to these differences and optimize supportive interventions.”

Gabrieli and Romeo are now working with researchers at the Harvard University Graduate School of Education to evaluate language and reading interventions that could better prepare preschool children from lower SES backgrounds to learn to read. In her new lab at the University of Maryland, Romeo also plans to further delve into how different aspects of low SES contribute to different areas of language and literacy development.

“No matter why a child is struggling with reading, they need the education and the attention to support them. Studies that try to tease out the underlying factors can help us in tailoring educational interventions to what a child needs,” she says.

The research was funded by the Ellison Medical Foundation, the Halis Family Foundation, and the National Institutes of Health.