Sylvia Abente, a clinical neurologist at the Universidad Nacional de Asunción in Paraguay, studies the range of symptoms that are characteristic of epilepsy. She works with Paraguay’s Indigenous peoples. Paraguay has two official languages, Spanish and Guaraní, and her command of both lets her help patients find the words to describe their epilepsy symptoms so that those symptoms can be treated.

Juan Carlos Caicedo Mera, a neuroscientist at the Universidad Externado de Colombia, uses rodent models to study the neurobiological effects of early-life stress. He has played a key role in raising public awareness of the biological and behavioral effects of physical punishment in early childhood, which has led to policy changes aimed at reducing its prevalence as a cultural practice in Colombia.

These are just two of the 33 neuroscientists from seven Latin American countries whom Jessica Chomik-Morales interviewed over 37 days for the third season of her Spanish-language podcast, “Mi Última Neurona,” which premieres on YouTube on September 18 at 5 p.m. Each episode runs between 45 and 90 minutes.

“I wanted to highlight their stories to dispel the misconception that world-class science can only be done in the United States and Europe,” says Chomik-Morales, “or that it can’t be achieved in South America because of financial and other barriers.”

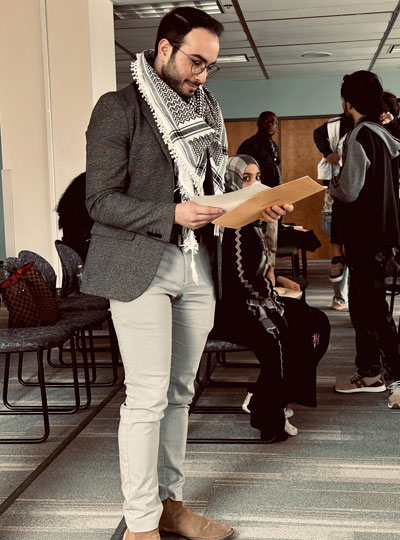

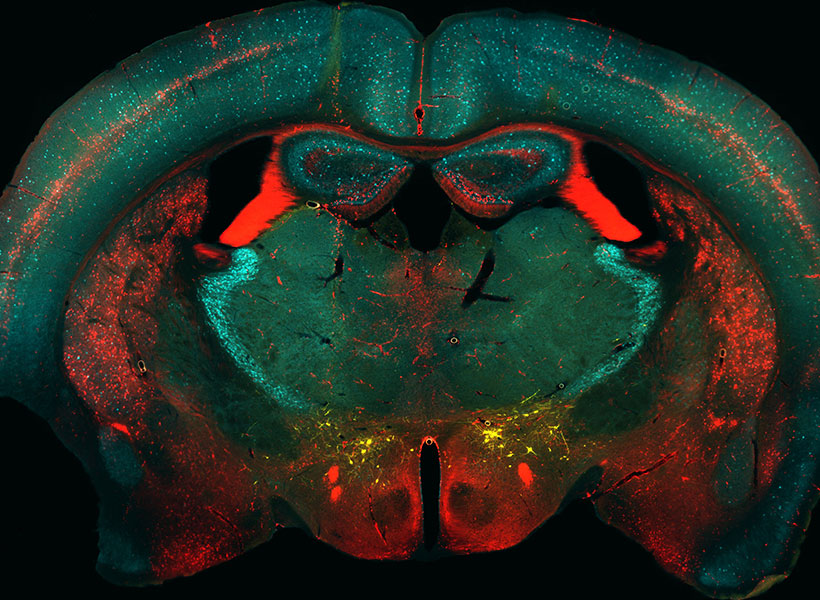

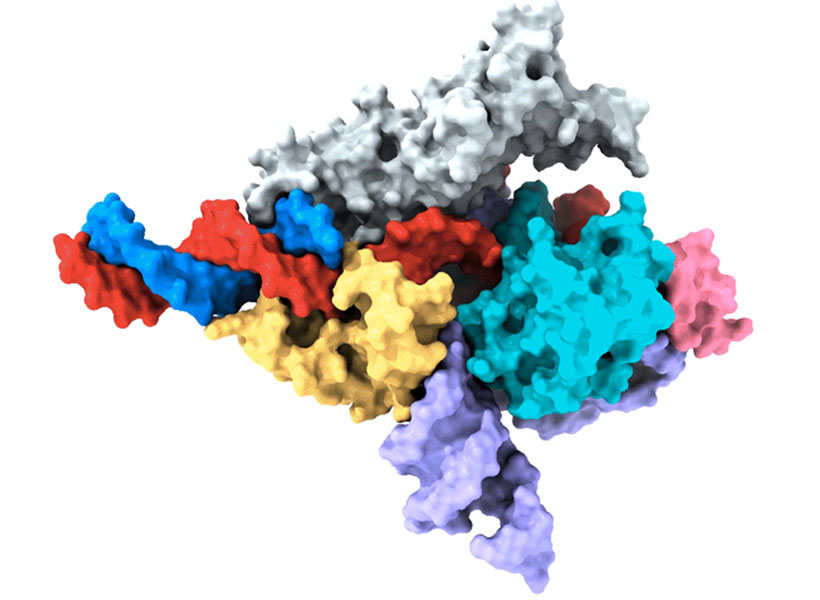

Chomik-Morales, a first-generation college graduate who grew up in Asunción, Paraguay, and Boca Raton, Florida, is now a postbaccalaureate research scholar at MIT. There she works with Laura Schulz, professor of cognitive science, and Nancy Kanwisher, a McGovern Institute investigator and the Walter A. Rosenblith Professor of Cognitive Neuroscience, using functional brain imaging to investigate how the brain explains the past, predicts the future, and intervenes on the present through causal reasoning.

“The podcast is aimed at a general audience and is suitable for all ages,” she says. “It explains neuroscience in an accessible way, to inspire young people to see that they, too, can become scientists, and to showcase the wide range of research being done in listeners’ home countries.”

The journey of a lifetime

“Mi Última Neurona” began as an idea in 2021 and quickly grew into a series of conversations with prominent Hispanic scientists, including L. Rafael Reif, a Venezuelan American electrical engineer and MIT’s 17th president.

Building on the professional relationships she established in seasons one and two, Chomik-Morales broadened her vision and compiled a list of potential guests in Latin America for the third season. With help from her scientific advisor, Héctor De Jesús-Cortés, a Puerto Rican postdoctoral researcher at MIT, and financial support from the McGovern Institute, the Picower Institute for Learning and Memory, the Department of Brain and Cognitive Sciences, and MIT International Science and Technology Initiatives, Chomik-Morales arranged interviews with scientists in Mexico, Peru, Colombia, Chile, Argentina, Uruguay, and Paraguay during the summer of 2023.

Flying every four or five days, and picking up new potential participants by referral from one leg of the trip to the next, Chomik-Morales traveled more than 10,000 miles and collected 33 stories for her third season. The scientists’ areas of expertise span a wide range of topics, from the social aspects of sleep-wake cycles to mood and personality disorders, along with linguistics and language in the brain and computer modeling as a research tool.

“If someone studies depression and anxiety, I want to hear their views on various therapies, including medications and also microdosing with hallucinogens,” says Chomik-Morales. “These are the things people are talking about.” She isn’t afraid to tackle sensitive topics, such as the relationship between hormones and sexual orientation, because “it’s important for people to hear experts talk about these things,” she says.

The tone of the interviews ranges from casual (“the researcher and I are like friends,” she says) to instructional (“professor to student”). What stays constant is the accessibility (technical jargon is avoided) and the opening and closing questions of every interview. To open: “How did you get here? What drew you to neuroscience?” To close: “What advice would you give a young Latino student interested in science, engineering, technology, and mathematics[1]?”

She lets her listeners’ frame of reference guide her. “If I didn’t understand something, or thought it could be explained better, I would say, ‘Let’s pause. What does this word mean?’” she says, even when she already knew the definition. She gives the example of the term “MEG” (magnetoencephalography): the measurement of the magnetic field generated by the electrical activity of neurons, which is often combined with magnetic resonance imaging to produce magnetic source images. To make the concept concrete, she would ask: “How does it work? Does this kind of scan hurt the patient?”

Paving the way for global networks

Chomik-Morales’s equipment was minimal: three Yeti microphones and a Canon video camera connected to her laptop. Interviews took place in classrooms, university offices, researchers’ homes, and even outdoors, since no soundproof studios were available. She has been working with sound engineer David Samuel Torres, from Puerto Rico, to get clearer audio.

No technical limitation could obscure how much the project mattered to the participating scientists.

“‘Mi Última Neurona’ showcases our diverse knowledge on a global stage, providing a more accurate portrait of the scientific landscape in Latin America,” says Constanza Baquedano, who is originally from Chile. “It is a step toward more inclusive representation in science.” Baquedano is an assistant professor of psychology at the Universidad Adolfo Ibáñez, where she uses electrophysiology together with electroencephalographic and behavioral measurements to study meditation and other contemplative states. “I was eager to be part of a project that sought to recognize our shared experiences as Latin American women in neuroscience.”

“Understanding the challenges and opportunities facing neuroscientists who work in Latin America is paramount,” says Agustín Ibáñez, professor and director of the Latin American Brain Health Institute (BrainLat) at the Universidad Adolfo Ibáñez in Chile. “This region, which is marked by significant inequalities that affect brain health, also presents unique challenges in the field of neuroscience,” says Ibáñez, whose main interest is the intersection of social, cognitive, and affective neuroscience. “By focusing on Latin America, the podcast brings to light stories that most media outlets rarely tell. That builds bridges and paves the way for global networks.”

For her part, Chomik-Morales is confident that her podcast will build a large following in Latin America. “I’m very grateful for MIT’s generous sponsorship,” she says. “This is the most rewarding project I’ve ever done.”

__

[1] Commonly abbreviated in English as STEM (Science, Technology, Engineering and Mathematics).