Nitric oxide is an important signaling molecule in the body, with a role in building nervous system connections that contribute to learning and memory. It also functions as a messenger in the cardiovascular and immune systems.

But researchers have found it difficult to pin down exactly what role it plays in these systems and how it functions. Because it is a gas, there has been no practical way to direct it to individual cells in order to observe its effects. Now, a team of scientists and engineers at MIT and elsewhere has found a way of generating the gas at precisely targeted locations inside the body, potentially opening new lines of research on this essential molecule’s effects.

The findings are reported today in the journal Nature Nanotechnology, in a paper by MIT professors Polina Anikeeva, Karthish Manthiram, and Yoel Fink; graduate student Jimin Park; postdoc Kyoungsuk Jin; and 10 others at MIT and in Taiwan, Japan, and Israel.

“It’s a very important compound,” says Anikeeva, who is also an Investigator at the McGovern Institute. But working out the relationship between delivering nitric oxide to particular cells and synapses and the resulting higher-level effects on learning has been difficult. So far, most studies have resorted to looking at systemic effects by knocking out genes for the enzymes the body uses to produce nitric oxide where it is needed as a messenger.

But that approach, she says, is “very brute force. This is a hammer to the system because you’re knocking it out not just from one specific region, let’s say in the brain, but you essentially knock it out from the entire organism, and this can have other side effects.”

Others have tried introducing compounds into the body that release nitric oxide as they decompose. This can produce somewhat more localized effects, but the compounds still spread out, and the release is slow and uncontrolled.

The team’s solution uses an electric voltage to drive the reaction that produces nitric oxide. This mirrors some industrial electrochemical production processes, which operate on a much larger scale but are relatively modular and controllable, enabling local, on-demand chemical synthesis. “We’ve taken that concept and said, you know what? You can be so local and so modular with an electrochemical process that you can even do this at the level of the cell,” Manthiram says. “And I think what’s even more exciting about this is that if you use electric potential, you have the ability to start production and stop production in a heartbeat.”

The team’s key achievement was finding a way to run this kind of electrochemically controlled reaction efficiently and selectively at the nanoscale. That required a suitable catalyst that could generate nitric oxide from a benign precursor, and the researchers found that nitrite was a promising precursor for electrochemical nitric oxide generation.

“We came up with the idea of making a tailored nanoparticle to catalyze the reaction,” Jin says. The team noted that the enzymes that catalyze nitric oxide generation in nature contain iron-sulfur centers. Drawing inspiration from these enzymes, they devised a catalyst made of iron sulfide nanoparticles, which activate the nitric-oxide-producing reaction in the presence of an electric field and nitrite. By further doping these nanoparticles with platinum, the team enhanced their electrocatalytic efficiency.
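For readers curious about the chemistry at this step: converting nitrite to nitric oxide is a one-electron reduction. The textbook half-reactions are shown below, first as commonly written for acidic solution and then balanced with water and hydroxide for neutral or basic conditions. The article does not specify which pathway operates on the iron sulfide catalyst inside tissue, so treat these as general stoichiometry rather than the team's measured mechanism.

$$
\begin{aligned}
\mathrm{NO_2^-} + 2\,\mathrm{H^+} + e^- &\rightarrow \mathrm{NO} + \mathrm{H_2O} && \text{(acidic)}\\
\mathrm{NO_2^-} + \mathrm{H_2O} + e^- &\rightarrow \mathrm{NO} + 2\,\mathrm{OH^-} && \text{(neutral or basic)}
\end{aligned}
$$

In both forms a single electron is transferred per NO molecule, which is why the current flowing through the electrode sets how fast nitric oxide is made.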

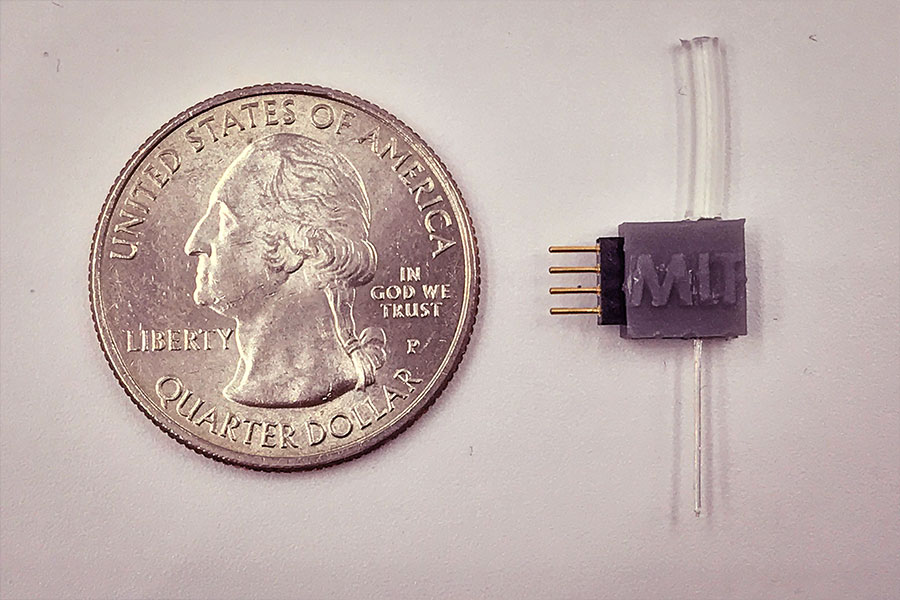

To shrink the electrocatalytic cell to the scale of biological cells, the team created custom fibers that contain positive and negative microelectrodes coated with the iron sulfide nanoparticles, along with a microfluidic channel that delivers sodium nitrite, the precursor material. When implanted in the brain, these fibers direct the precursor to the targeted neurons. The reaction can then be switched on at will through the electrodes in the same fiber, producing an instant burst of nitric oxide right at that spot so that its effects can be recorded in real time.

Photo: Anikeeva Lab
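Because the electrodes drive the reaction, the amount of nitric oxide produced is, in principle, governed by Faraday's law: if each NO molecule made from nitrite takes one electron, the charge passed sets the yield, and cutting the current stops production. The sketch below is a back-of-the-envelope estimate, not the team's model. The one-electron stoichiometry, the default 100 percent Faradaic efficiency, the 1-microampere example pulse, and the helper name `no_produced_mol` are all assumptions for illustration.

```python
# Faraday constant in coulombs per mole of electrons (CODATA 2018 value, rounded).
FARADAY = 96485.33212


def no_produced_mol(current_a: float, duration_s: float,
                    electrons_per_no: int = 1,
                    faradaic_efficiency: float = 1.0) -> float:
    """Estimate moles of NO made by a constant electrode current (hypothetical helper).

    Faraday's law: n = efficiency * I * t / (z * F).
    Assumes a one-electron nitrite reduction (z = 1) and, by default, that every
    electron goes into making NO (efficiency = 1.0); real devices will fall short.
    """
    if current_a < 0 or duration_s < 0:
        raise ValueError("current and duration must be non-negative")
    if not 0.0 <= faradaic_efficiency <= 1.0:
        raise ValueError("faradaic_efficiency must be between 0 and 1")
    charge_c = current_a * duration_s  # total charge passed, in coulombs
    return faradaic_efficiency * charge_c / (electrons_per_no * FARADAY)


if __name__ == "__main__":
    # Hypothetical pulse: 1 microampere for 1 second.
    n = no_produced_mol(1e-6, 1.0)
    print(f"{n * 1e12:.2f} pmol of NO")
    # With the current switched off, no NO is produced however long we wait.
    print(no_produced_mol(0.0, 10.0))
```

Under these assumptions, a 1-microampere pulse lasting one second yields roughly 10 picomoles of nitric oxide; any real Faradaic efficiency below 1 scales that figure down proportionally.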

As a test, they used the system in a rodent model to activate a brain region that is known to be a reward center for motivation and social interaction, and that plays a role in addiction. They showed that it did indeed provoke the expected signaling responses, demonstrating its effectiveness.

Anikeeva says this “would be a very useful biological research platform, because finally, people will have a way to study the role of nitric oxide at the level of single cells, in whole organisms that are performing tasks.” She points out that certain disorders are associated with disruptions of the nitric oxide signaling pathway, so more detailed studies of how this pathway operates could help lead to treatments.

The method could be generalizable, Park says, as a way of producing other molecules of biological interest within an organism. “Essentially we can now have this really scalable and miniaturized way to generate many molecules, as long as we find the appropriate catalyst, and as long as we find an appropriate starting compound that is also safe.” This approach to generating signaling molecules in situ could have wide applications in biomedicine, he says.

“One of our reviewers for this manuscript pointed out that this has never been done — electrolysis in a biological system has never been leveraged to control biological function,” Anikeeva says. “So, this is essentially the beginning of a field that could potentially be very useful” to study molecules that can be delivered at precise locations and times, for studies in neurobiology or any other biological functions. That ability to make molecules on demand inside the body could be useful in fields such as immunology or cancer research, she says.

The project got started as a result of a chance conversation between Park and Jin, who were friends working in different fields — neurobiology and electrochemistry. Their initial casual discussions ended up leading to a full-blown collaboration between several departments. But in today’s locked-down world, Jin says, such chance encounters and conversations have become less likely. “In the context of how much the world has changed, if this were in this era in which we’re all apart from each other, and not in 2018, there is some chance that this collaboration may just not ever have happened.”

“This work is a milestone in bioelectronics,” says Bozhi Tian, an associate professor of chemistry at the University of Chicago, who was not connected to this work. “It integrates nanoenabled catalysis, microfluidics, and traditional bioelectronics … and it solves a longstanding challenge of precise neuromodulation in the brain, by in situ generation of signaling molecules. This approach can be widely adopted by the neuroscience community and can be generalized to other signaling systems, too.”

Besides MIT, the team included researchers at National Chiao Tung University in Taiwan, NEC Corporation in Japan, and the Weizmann Institute of Science in Israel. The work was supported by the National Institute of Neurological Disorders and Stroke, the National Institutes of Health, the National Science Foundation, and MIT’s Department of Chemical Engineering.