The School of Science has announced that 14 of its faculty members have been appointed to named professorships. The faculty members selected for these positions receive additional support to pursue their research and develop their careers.

Riccardo Comin is an assistant professor in the Department of Physics. He has been named a Class of 1947 Career Development Professor. This three-year professorship is granted in recognition of the recipient’s outstanding work in both research and teaching. Comin is interested in condensed matter physics. He uses experimental methods to synthesize new materials, and he uses spectroscopy and scattering to investigate solid-state physics. Specifically, the Comin lab seeks to discover and characterize electronic phases of quantum materials. Recently, his lab and its collaborators discovered that weaving a conductive material into a particular pattern, known as a “kagome” pattern, can produce quantum behavior when electricity is passed through it.

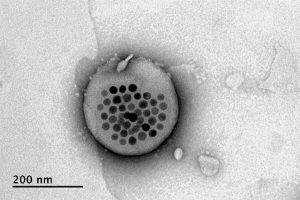

Joseph Davis, assistant professor in the Department of Biology, has been named a Whitehead Career Development Professor. He studies how cells build and deconstruct complex molecular machinery. His lab’s work relies on biochemistry, biophysics, and structural approaches that include spectrometry and microscopy. A current project investigates the formation of the ribosome, an essential component of all cells. His work has implications for metabolic engineering, drug delivery, and materials science.

Lawrence Guth is now the Claude E. Shannon (1940) Professor of Mathematics. Guth explores harmonic analysis and combinatorics, and he is also interested in metric geometry and in identifying connections between geometric inequalities and topology. Metric geometry revolves around estimating measurements such as length, area, volume, and distance. Combinatorial geometry, meanwhile, is essentially the study of how patterns of simple shapes, such as lines and circles, intersect.

Michael Halassa, an assistant professor in the Department of Brain and Cognitive Sciences, will hold the three-year Class of 1958 Career Development Professorship. His area of interest is brain circuitry. By investigating the networks and connections in the brain, he hopes to understand how they operate and to identify ways in which they might deviate from normal operation, causing neurological and psychiatric disorders. Several publications from his lab discuss improvements in treating the deleterious symptoms of autism spectrum disorder and schizophrenia, and his lab’s latest findings provide insight into how the brain filters out distractions, particularly noise. Halassa is an associate investigator at the McGovern Institute for Brain Research and an affiliate member of the Picower Institute for Learning and Memory.

Sebastian Lourido, an assistant professor and the new Latham Family Career Development Professor in the Department of Biology for the next three years, works toward treatments for infectious disease by learning about the vulnerabilities of parasites. Focusing on human pathogens, Lourido and his lab study, at the molecular level, what allows parasites to be so widespread and deadly. This includes exploring how calcium regulates eukaryotic cells, which in turn affects processes such as muscle contraction, membrane repair, and kinase responses.

Brent Minchew has been named a Cecil and Ida Green Career Development Professor for a three-year term. Minchew, a faculty member in the Department of Earth, Atmospheric and Planetary Sciences, studies glaciers using remote sensing methods such as interferometric synthetic aperture radar. His research into glaciers, including their mechanics, their rheology, and their interactions with the surrounding environment, extends to observing how they respond to climate change. His group recently determined that, under a worst-case climate projection, Antarctica would not contribute as much to rising sea levels as previously predicted.

Elly Nedivi, a professor in the departments of Brain and Cognitive Sciences and Biology, has been named the inaugural William R. (1964) and Linda R. Young Professor. She works on brain plasticity, defined as the brain’s ability to adapt with experience, by identifying genes that play a role in plasticity and determining their neuronal and synaptic functions. In one of its recent publications, her lab suggests that variants of a particular gene may undermine the expression or production of a protein, increasing the risk of bipolar disorder. In addition, she collaborates with others at MIT to develop new microscopy tools that allow better analysis of brain connectivity. Nedivi is also a member of the Picower Institute for Learning and Memory.

Andrei Negut has been named a Class of 1947 Career Development Professor for a three-year term. Negut, a member of the Department of Mathematics, focuses on problems in geometric representation theory. This field requires working in algebraic geometry and representation theory simultaneously, and it has implications for mathematical physics, symplectic geometry, combinatorics, and probability theory.

Matěj Peč, the Victor P. Starr Career Development Professor in the Department of Earth, Atmospheric and Planetary Sciences until 2021, studies how the movement of Earth’s tectonic plates affects rocks, both mechanically and microstructurally. To investigate such a large-scale topic, he uses high-pressure, high-temperature laboratory experiments to simulate the driving forces associated with plate motion, and he compares the results with natural observations and theoretical modeling. His lab has identified a particular boundary beneath Earth’s crust where rock properties shift from brittle, like peanut brittle, to viscous, like honey, and has determined how that layer accommodates the strain building up between the two. In his investigations, he also considers the effects on melt generation miles underground.

Kerstin Perez has been named the three-year Class of 1948 Career Development Professor in the Department of Physics. Her research interest is dark matter. She uses novel analytical tools, such as those aboard a balloon-borne instrument that, with the help of cosmic rays, can carry out processes similar to those of a particle collider like the Large Hadron Collider, in order to detect new particle interactions in space. In another research project, Perez uses a satellite telescope array orbiting Earth to search for X-ray signatures of mysterious particles. Her work involves extensive collaboration around observatories, instruments, and telescopes. Perez is affiliated with the Kavli Institute for Astrophysics and Space Research.

Bjorn Poonen, named a Distinguished Professor of Science in the Department of Mathematics, studies number theory and algebraic geometry. He, his colleagues, and his lab members develop algorithms that can solve polynomial equations with the particular requirement that the solutions be rational numbers. Problems of this type can be useful in encoding data. He also helps to determine what cannot be determined, that is, to explore the limits of computing.

Daniel Suess, named a Class of 1948 Career Development Professor in the Department of Chemistry, uses molecular chemistry to explain global biogeochemical cycles. Working in the fields of inorganic and biological chemistry, Suess and his lab seek to understand complex and challenging reactions, as well as the clustering of particular chemical elements and their catalysts. Most notably, these include reactions that are essential to solar fuels. Suess’s investigations of both biological and synthetic systems broadly aim to improve human health and reduce environmental impacts.

Alison Wendlandt is the new holder of the five-year Cecil and Ida Green Career Development Professorship. In the Department of Chemistry, the Wendlandt research group draws on physical organic chemistry and on organic and organometallic synthesis to develop reaction catalysts. Her team concentrates on designing new catalysts, identifying processes to which these catalysts can be applied, and determining principles that can extend existing reactions. Her team’s efforts span the fields of synthetic organic chemistry, reaction kinetics, and reaction mechanisms.

Julien de Wit, a Department of Earth, Atmospheric and Planetary Sciences assistant professor, has been named a Class of 1954 Career Development Professor. He combines math and science to answer big-picture questions about planets. Using data science, de Wit develops new analytical techniques for mapping exoplanetary atmospheres, studies planet-star interactions in planetary systems, and determines the atmospheric and planetary properties of exoplanets from spectroscopic information. He is a member of the scientific teams involved in the Search for habitable Planets EClipsing ULtra-cOOl Stars (SPECULOOS) and the TRAnsiting Planets and PlanetesImals Small Telescope (TRAPPIST) projects, which are made up of an international collection of observatories. He is affiliated with the Kavli Institute.