The McGovern Institute for Brain Research announced today that Harvard neurobiologist David D. Ginty has been selected for the 2022 Edward M. Scolnick Prize in Neuroscience. Ginty, who is the Edward R. and Anne G. Lefler Professor of Neurobiology at Harvard Medical School, is being recognized for his fundamental discoveries about the neural mechanisms underlying the sense of touch. The Scolnick Prize is awarded annually by the McGovern Institute for outstanding advances in neuroscience.

“David Ginty has made seminal contributions in basic research that also have important translational implications,” says Robert Desimone, McGovern Institute Director and chair of the selection committee. “His rigorous research has led us to understand how the peripheral nervous system encodes the overall perception of touch, and how molecular mechanisms underlying this can fail in disease states.”

Ginty obtained his PhD in 1989 with Edward Seidel, studying cell proliferation factors. He went on to a postdoctoral fellowship researching nerve growth factor with John Wagner at the Dana-Farber Cancer Institute and, upon Wagner’s departure to Cornell, transferred to Michael Greenberg’s lab at Harvard Medical School. There, he dissected intracellular signaling pathways for neuronal growth factors and neurotransmitters and developed key antibody reagents to detect activated forms of transcription factors. These antibody tools are now used by labs around the world in research on neuronal plasticity and brain disorders, including Alzheimer’s disease and schizophrenia.

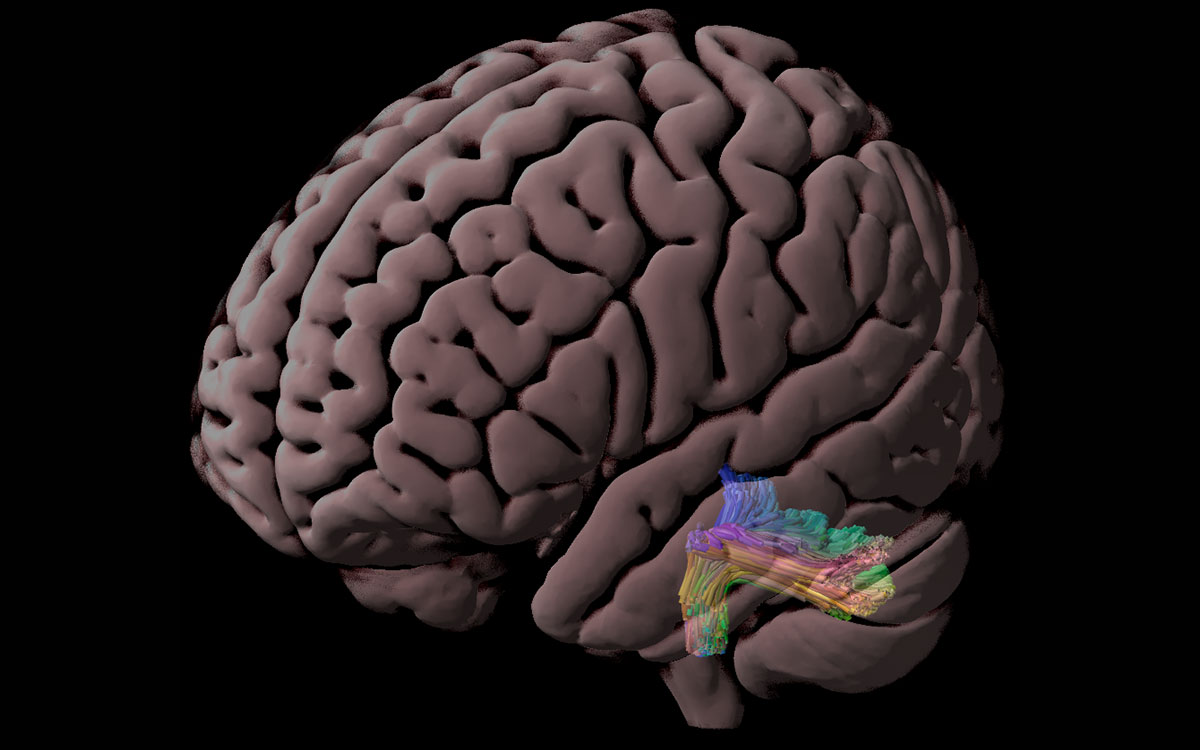

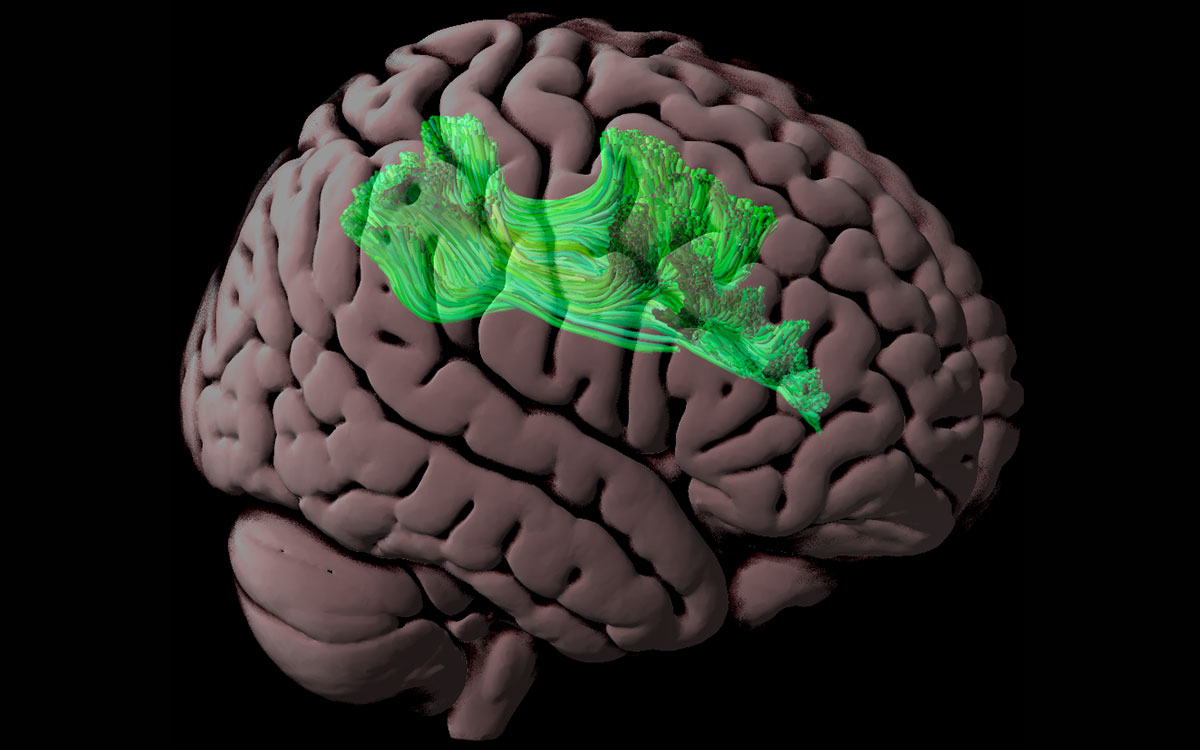

In 1995, Ginty started his own laboratory at Johns Hopkins University with a focus on the development and functional organization of the peripheral nervous system. Ginty’s group created and applied the latest genetic engineering techniques in mice to uncover how the peripheral nervous system develops and is organized at the molecular, cellular and circuit levels to perceive touch. Most notably, using gene targeting combined with electrophysiological, behavioral and anatomical analyses, the Ginty lab untangled properties and functions of the different types of touch neurons, termed low- and high-threshold mechanoreceptors, that convey distinct aspects of stimulus information from the skin to the central nervous system. Ginty and colleagues also discovered organizational principles of spinal cord and brainstem circuits dedicated to touch information processing, and that integration of signals from the different mechanoreceptor types begins within spinal cord networks before signal transmission to the brain.

In 2013, Ginty joined the faculty of Harvard Medical School, where his team applied their genetic tools and techniques to probe the neural basis of touch sensitivity disorders. They discovered properties and functions of peripheral sensory neurons, spinal cord circuits, and ascending pathways that transmit noxious, painful stimuli from the periphery to the brain. They also asked whether abnormalities in peripheral nervous system function lead to touch over-reactivity in cases of autism or in neuropathic pain caused by nerve injury, chemotherapy, or diabetes, where even a soft touch can be aversive or painful. The team found that sensory abnormalities observed in several mouse models of autism spectrum disorder could be traced to peripheral mechanosensory neurons. They also found that reducing the activity of peripheral sensory neurons prevented tactile over-reactivity in these models and even, in some cases, lessened anxiety and abnormal social behaviors. These findings provided a plausible explanation for how sensory dysfunction may contribute to physiological and cognitive impairments in autism. Importantly, this work laid the groundwork for a new approach and initiative to identify potential therapies for disorders of touch and pain.

Ginty was named a Howard Hughes Medical Institute Investigator in 2000 and was elected to the American Academy of Arts and Sciences in 2015 and the National Academy of Sciences in 2017. He shared Columbia University’s Alden W. Spencer Prize with Ardem Patapoutian in 2017 and was awarded the Society for Neuroscience Julius Axelrod Prize in 2021. Ginty is also known for exceptional mentorship. He directed the neuroscience graduate program at Johns Hopkins from 2006 to 2013 and now serves as the associate director of Harvard’s neurobiology graduate program.

The McGovern Institute will award the Scolnick Prize to Ginty on Wednesday, June 1, 2022. At 4:00 pm he will deliver a lecture entitled “The sensory neurons of touch: beauty is skin deep,” to be followed by a reception at the McGovern Institute, 43 Vassar Street (building 46, room 3002) in Cambridge. The event is free and open to the public; registration is required.