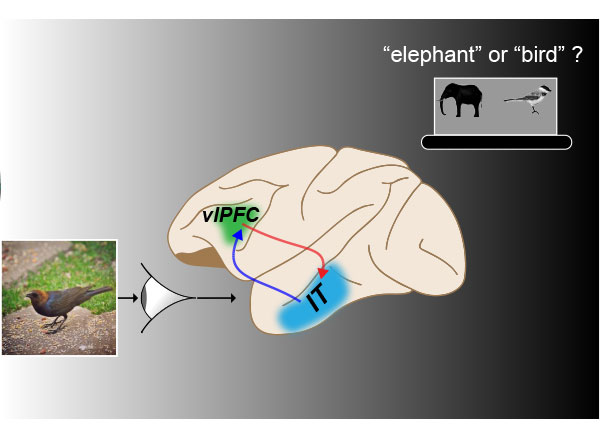

Our ability to pay attention, plan, and troubleshoot involves cognitive processing by the brain’s prefrontal cortex. The balance of activity among excitatory and inhibitory neurons in the cortex, based on local neural circuits and distant inputs, is key to these cognitive functions.

A recent study from the McGovern Institute shows that excitatory inputs from the thalamus activate a local inhibitory circuit in the prefrontal cortex, revealing new insights into how these cognitive circuits may be controlled.

“For the field, systematic identification of these circuits is crucial in understanding behavioral flexibility and interpreting psychiatric disorders in terms of dysfunction of specific microcircuits,” says postdoctoral associate Arghya Mukherjee, lead author on the report.

Hub of activity

The thalamus is located in the center of the brain and is considered a cerebral hub based on its inputs from a diverse array of brain regions and outputs to the striatum, hippocampus, and cerebral cortex. More than 60 thalamic nuclei (cellular regions) have been defined and are broadly divided into “sensory” or “higher-order” thalamic regions based on whether they relay primary sensory inputs or instead have inputs exclusively from the cerebrum.

Considering the fundamental distinction between the input connections of the sensory and higher-order thalamus, Mukherjee, a researcher in the lab of Michael Halassa, the Class of 1958 Career Development Professor in MIT’s Department of Brain and Cognitive Sciences, decided to explore whether there are similarly profound distinctions in their outputs to the cerebral cortex.

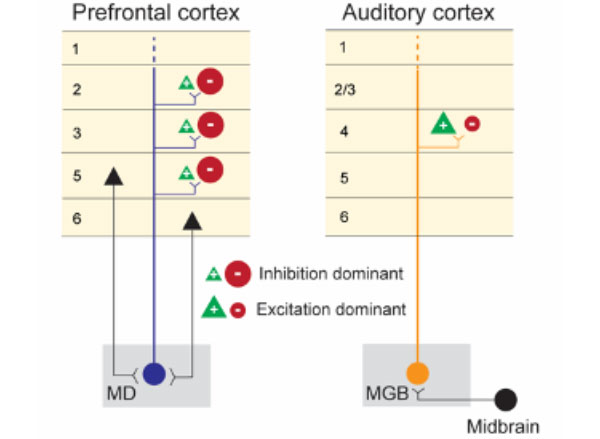

He addressed this question in mice by directly comparing the outputs of the medial geniculate body (MGB), a sensory thalamic region, and the mediodorsal thalamus (MD), a higher-order thalamic region. The researchers selected these two regions because the relatively accessible MGB nucleus relays auditory signals to cerebral cortical regions that process sound, and the MD interconnects regions of the prefrontal cortex.

Their study, now available as a preprint in eLife, describes key functional and anatomical differences between these two thalamic circuits. These findings build on Halassa’s previous work showing that outputs from higher-order thalamic nuclei play a central role in cognitive processing.

Circuit analysis

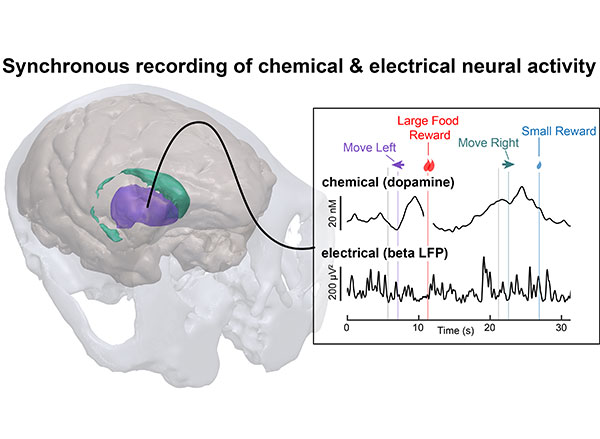

Using cutting-edge stimulation and recording methods, the researchers found that neurons in the prefrontal and auditory cortices have dramatically different responses to activation of their respective MD and MGB inputs.

The researchers stimulated the MD-prefrontal and MGB-auditory cortex circuits using optogenetic technology and recorded the response to this stimulation with custom multi-electrode scaffolds that hold independently movable micro-drives for recording hundreds of neurons in the cortex. When MGB neurons were stimulated with light, there was strong activation of neurons in the auditory cortex. By contrast, MD stimulation caused a suppression of neuron firing in the prefrontal cortex and concurrent activation of local inhibitory interneurons. The separate activation of the two thalamocortical circuits had dramatically different impacts on cortical output, with the sensory thalamus seeming to promote feed-forward activity and the higher-order thalamus stimulating inhibitory microcircuits within the cortical target region.

“The textbook view of the thalamus is an excitatory cortical input, and the fact that turning on a thalamic circuit leads to a net cortical inhibition was quite striking and not something you would have expected based on reading the literature,” says Halassa, who is also an associate investigator at the McGovern Institute. “Arghya and his colleagues did an amazing job following that up with detailed anatomy to explain why might this effect be so.”

Anatomical differences

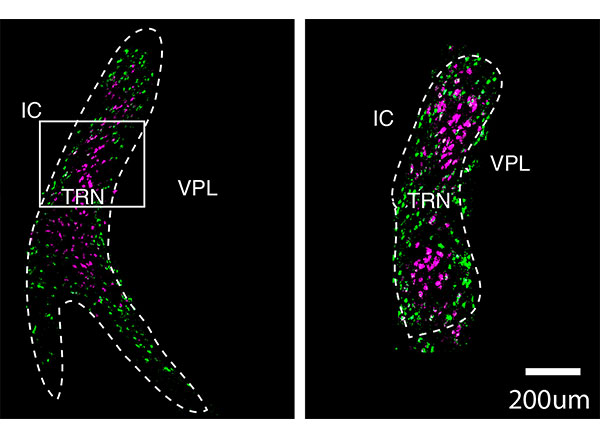

Using a system called mammalian GFP (green fluorescent protein) reconstitution across synaptic partners (mGRASP), the researchers demonstrated that MD and MGB projections target different types of cortical neurons, offering a possible explanation for their differing effects on cortical activity.

With mGRASP, the presynaptic terminal (in this case, from MD or MGB) expresses one part of the fluorescent protein and the postsynaptic neuron (in this case, in the prefrontal or auditory cortex) expresses the other part. Neither part fluoresces on its own. Only when there is a close synaptic connection do the two parts of GFP come together to become fluorescent. These experiments showed that MD neurons synapse more frequently onto inhibitory interneurons in the prefrontal cortex, whereas MGB neurons synapse onto excitatory neurons with larger synapses, consistent with only MGB being a strong activity driver.

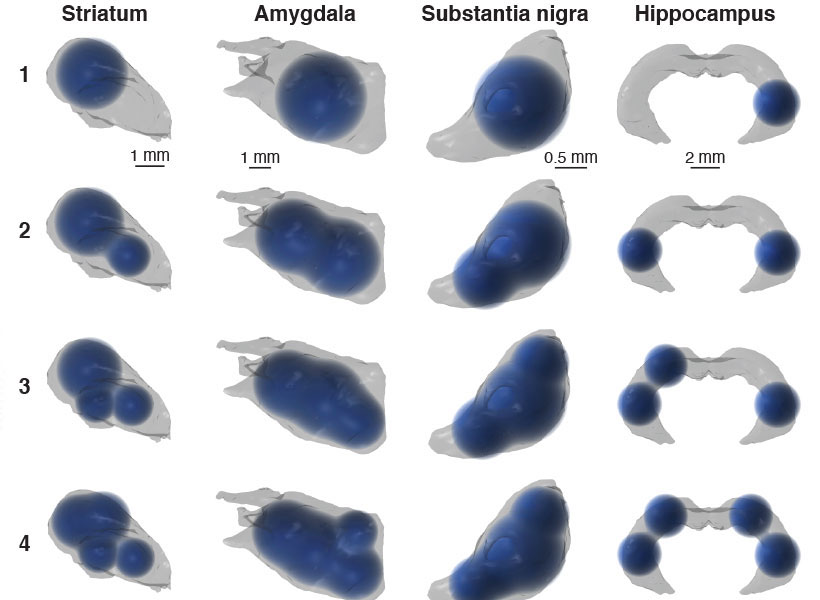

Using fluorescent viral vectors that can cross synapses of interconnected neurons, a technology developed by McGovern principal research scientist Ian Wickersham, the researchers were also able to map the inputs to the MD and MGB thalamic regions. Viruses such as rabies are well suited for tracing neural connections because they have evolved to spread from neuron to neuron through synaptic junctions.

The inputs to the targeted higher-order and sensory thalamocortical neurons, identified across the brain, appeared to arise from forebrain regions and midbrain sensory regions, respectively, as expected. The MGB inputs were consistent with a sensory relay function, arising primarily from the auditory input pathway. By contrast, MD inputs arose from a wide array of cerebral cortical regions and basal ganglia circuits, consistent with MD receiving contextual and motor command information.

Direct comparisons

By directly comparing these microcircuits, the Halassa lab has revealed important clues about the function and anatomy of these sensory and higher-order brain connections. It is only through a systematic understanding of these circuits that we can begin to interpret how their dysfunction may contribute to psychiatric disorders like schizophrenia.

It is this basic scientific inquiry that often fuels their research, says Halassa. “Excitement about science is part of the glue that holds us all together.”