When neurons fire an electrical impulse, they also experience a surge of calcium ions. By measuring those surges, researchers can indirectly monitor neuron activity, helping them to study the role of individual neurons in many different brain functions.

One drawback to this technique is crosstalk from the axons and dendrites that extend from neighboring neurons, which makes it harder to isolate the signal from the neuron being studied. MIT engineers have now developed a way to overcome that issue by creating calcium indicators, or sensors, that accumulate only in the body of a neuron.

“People are using calcium indicators for monitoring neural activity in many parts of the brain,” says Edward Boyden, the Y. Eva Tan Professor in Neurotechnology and a professor of biological engineering and of brain and cognitive sciences at MIT. “Now they can get better results, obtaining more accurate neural recordings that are less contaminated by crosstalk.”

To achieve this, the researchers fused a commonly used calcium indicator called GCaMP to a short peptide that targets it to the cell body. The new molecule, which the researchers call SomaGCaMP, can be easily incorporated into existing workflows for calcium imaging, the researchers say.

Boyden is the senior author of the study, which appears today in Neuron. The paper’s lead authors are Research Scientist Or Shemesh, postdoc Changyang Linghu, and former postdoc Kiryl Piatkevich.

Molecular focus

The GCaMP calcium indicator consists of a fluorescent protein linked to a calcium-binding protein called calmodulin and to a calmodulin-binding peptide called M13. GCaMP fluoresces more brightly when it binds calcium ions, allowing researchers to indirectly measure neuron activity.

“Calcium is easy to image, because it goes from a very low concentration inside the cell to a very high concentration when a neuron is active,” says Boyden, who is also a member of MIT’s McGovern Institute for Brain Research, Media Lab, and Koch Institute for Integrative Cancer Research.

The simplest way to detect these fluorescent signals is with a type of imaging called one-photon microscopy. This relatively inexpensive technique can image large brain samples at high speed, but it also picks up crosstalk between neighboring neurons. Because GCaMP spreads into all parts of a neuron, signals from the axons and dendrites of one neuron can appear to come from the cell body of a neighbor, making the recording less accurate.

A more expensive technique called two-photon microscopy can partly overcome this by focusing light very narrowly onto individual neurons, but this approach requires specialized equipment and is also slower.

Boyden’s lab took a different approach, modifying the indicator itself rather than the imaging equipment.

“We thought, rather than optically focusing light, what if we molecularly focused the indicator?” he says. “A lot of people use hardware, such as two-photon microscopes, to clean up the imaging. We’re trying to build a molecular version of what other people do with hardware.”

In a related paper published last year, Boyden and his colleagues used a similar strategy to reduce crosstalk between fluorescent probes that directly image neurons’ membrane voltage. In parallel, they applied the same idea to calcium imaging, which is a much more widely used technique.

To target GCaMP exclusively to the cell bodies of neurons, the researchers tried fusing it to many different proteins. Working with MIT biology Professor Amy Keating, who is also an author of the paper, they explored two types of candidates: naturally occurring proteins that are known to accumulate in the cell body, and human-designed peptides. The synthetic peptides are coiled-coil proteins, which have a distinctive structure in which multiple helices wind around one another.

Less crosstalk

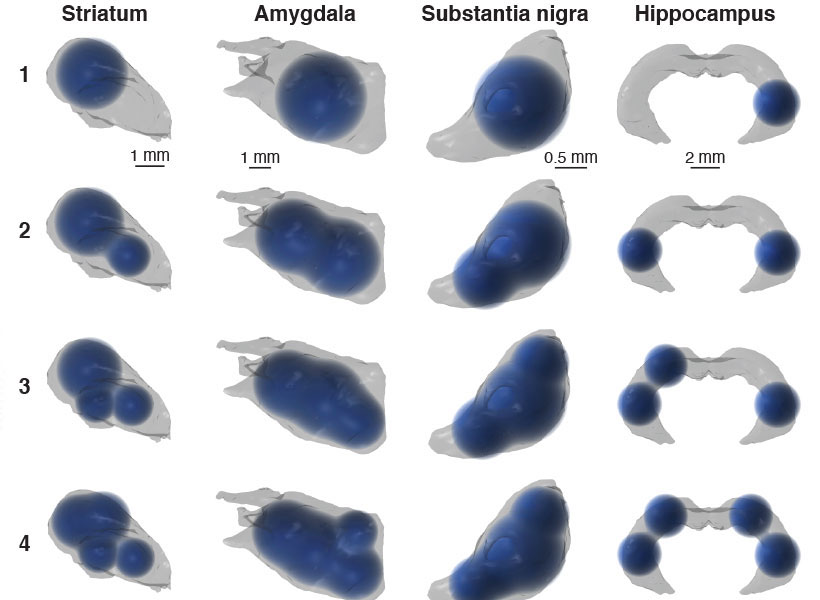

The researchers screened about 30 candidates in neurons grown in lab dishes and then chose two, one artificial coiled-coil and one naturally occurring peptide, to test in animals. Working with Misha Ahrens, who studies zebrafish at the Janelia Research Campus, they found that both versions offered significant improvements over the original GCaMP. The signal-to-noise ratio, a measure of the strength of the signal compared to background activity, went up, and the activity of adjacent neurons was less correlated.

In studies of mice, performed in the lab of Xue Han at Boston University, the researchers also found that the new indicators reduced the correlation between the activity of neighboring neurons. Additional studies using a miniature microscope (called a microendoscope), performed in the lab of Kay Tye at the Salk Institute for Biological Studies, revealed a significant increase in signal-to-noise ratio with the new indicators.

“Our new indicator makes the signals more accurate. This suggests that the signals that people are measuring with regular GCaMP could include crosstalk,” Boyden says. “There’s the possibility of artifactual synchrony between the cells.”

In all of the animal studies, they found that the artificial, coiled-coil protein produced a brighter signal than the naturally occurring peptide that they tested. Boyden says it’s unclear why the coiled-coil proteins work so well, but one possibility is that they bind to each other, making them less likely to travel very far within the cell.

Boyden hopes to use the new molecules to try to image the entire brains of small animals such as worms and fish, and his lab is also making the new indicators available to any researchers who want to use them.

“It should be very easy to implement, and in fact many groups are already using it,” Boyden says. “They can just use the regular microscopes that they already are using for calcium imaging, but instead of using the regular GCaMP molecule, they can substitute our new version.”

The research was primarily funded by the National Institute of Mental Health and the National Institute on Drug Abuse, as well as a Director’s Pioneer Award from the National Institutes of Health, and by Lisa Yang, John Doerr, the HHMI-Simons Faculty Scholars Program, and the Human Frontier Science Program.