Visual art has found many ways of representing objects, from the ornate Baroque period to modernist simplicity. Artificial visual systems are somewhat analogous: from relatively simple beginnings inspired by key regions in the visual cortex, recent advances in performance have seen increasing complexity.

“Our overall goal has been to build an accurate, engineering-level model of the visual system, to ‘reverse engineer’ visual intelligence,” explains James DiCarlo, the head of MIT’s Department of Brain and Cognitive Sciences, an investigator in the McGovern Institute for Brain Research and the Center for Brains, Minds, and Machines (CBMM). “But very high-performing ANNs have started to drift away from brain architecture, with complex branching architectures that have no clear parallel in the brain.”

A new model from the DiCarlo lab has re-imposed a brain-like architecture on an object recognition network. The result is a shallow-network architecture with surprisingly high performance, indicating that we can simplify deeper, and more baroque, networks yet retain high performance in artificial learning systems.

“We’ve made two major advances,” explains graduate student Martin Schrimpf, who led the work with Jonas Kubilius at CBMM. “We’ve found a way of checking how well models match the brain, called Brain-Score, and developed a model, CORnet, that moves artificial object recognition, as well as machine learning architectures, forward.”

Back to the brain

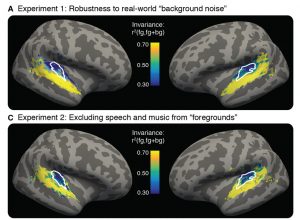

Deep convolutional artificial neural networks (ANNs) were initially inspired by brain anatomy, and are the leading models in artificial object recognition. Training these feedforward systems to recognize objects in ImageNet, a large database of images, has allowed the performance of ANNs to improve vastly, but at the same time networks have literally branched out, becoming increasingly complex with hundreds of layers. In contrast, the visual ventral stream, a series of cortical brain regions that unpack object identity, contains just four key regions. In addition, these ANNs are entirely feedforward, while the primate cortical visual system has densely interconnected wiring, in other words, recurrent connectivity. While primate-like object recognition capabilities can be captured through feedforward-only networks, recurrent wiring in the brain has long been suspected, and was recently shown in two DiCarlo lab papers led by Kar and Tang respectively, to be important.

DiCarlo and colleagues have now developed CORnet-S, inspired by very complex, state-of-the-art neural networks. CORnet-S has four computational areas, analogous to cortical visual areas (V1, V2, V4, and IT). In addition, CORnet-S contains repeated, or recurrent, connections.

“We really pre-defined layers in the ANN, defining V1, V2, and so on, and introduced feedback and repeated connections,” explains Schrimpf. “As a result, we ended up with fewer layers, and less ‘dead space’ that cannot be mapped to the brain. In short, a simpler network.”
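To make the idea of "repeated connections" concrete, here is a minimal sketch in plain Python of one common way to read recurrence: each area reuses the same weights for several passes, so the number of distinct weight sets stays at four even though the unrolled computation is deeper. This is an illustration only, not the CORnet-S implementation. The `Area` class, the scalar weights, and the repeat counts are hypothetical placeholders chosen for the example; only the four area names come from the description above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Area:
    """One model area with a single shared set of (toy, scalar) weights."""
    name: str
    weight: float  # hypothetical stand-in for the area's learned weights
    bias: float
    steps: int     # hypothetical number of times the same weights are reused

    def forward(self, x: List[float]) -> List[float]:
        for _ in range(self.steps):
            # The same weight and bias are applied on every pass:
            # recurrence expressed as weight sharing across time steps.
            x = [max(0.0, self.weight * v + self.bias) for v in x]
        return x


# Four areas named after the cortical regions; all numbers are placeholders.
AREAS = [
    Area("V1", weight=0.9, bias=0.1, steps=1),
    Area("V2", weight=0.8, bias=0.1, steps=2),
    Area("V4", weight=0.7, bias=0.1, steps=4),
    Area("IT", weight=0.6, bias=0.1, steps=2),
]


def run(x: List[float]) -> List[float]:
    """Pass an input through the four areas in order."""
    for area in AREAS:
        x = area.forward(x)
    return x


def parameter_sets() -> int:
    """Number of distinct weight sets (one per area)."""
    return len(AREAS)


def unrolled_depth() -> int:
    """Number of sequential transformations once recurrence is unrolled."""
    return sum(area.steps for area in AREAS)
```

In this toy setup, `parameter_sets()` stays at four while `unrolled_depth()` is larger, which is the sense in which a recurrent network can be shallow on paper yet perform a longer chain of computation.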

Keeping score

To optimize the system, the researchers incorporated quantitative assessment through a new system, Brain-Score.

“Until now, we’ve needed to qualitatively eyeball model performance relative to the brain,” says Schrimpf. “Brain-Score allows us to actually quantitatively evaluate and benchmark models.”

They found that CORnet-S ranks highly on Brain-Score, and is the best performer of all shallow ANNs. Indeed, the system, shallow as it is, rivals the complex, ultra-deep ANNs that currently perform at the highest level.

CORnet was also benchmarked against human performance. To test, for example, whether the system can predict human behavior, 1,472 humans were shown images for 100 ms and then asked to identify objects in them. CORnet-S was able to predict how accurately humans made calls about what they had briefly glimpsed (bear vs. dog, etc.). Indeed, CORnet-S is able to predict the behavior, as well as the neural dynamics, of the visual ventral stream, indicating that it is modeling primate-like behavior.
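One simple way to turn "does the model predict human accuracy?" into a number is to correlate the model's per-task accuracy with the human per-task accuracy. The sketch below does this with a hand-written Pearson correlation. It is an assumption for illustration, not the actual Brain-Score metric, and the accuracy values are invented placeholders rather than data from the study.

```python
from math import sqrt
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


# Invented per-task accuracies (e.g. one value per object pair such as
# bear vs. dog). These are placeholders, not results from the study.
human_accuracy = [0.90, 0.60, 0.75, 0.80]
model_accuracy = [0.85, 0.55, 0.70, 0.90]

# A value near 1 would mean the model finds the same tasks easy or hard
# as humans do; a value near 0 would mean no such relationship.
behavioral_match = pearson(human_accuracy, model_accuracy)
```

A score built this way rewards a model for making the same pattern of errors as people, not merely for being accurate overall.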

“We thought we’d lose performance by going to a wide, shallow network, but with recurrence, we hardly lost any,” says Schrimpf. “The message for machine learning more broadly is you can get away without really deep networks.”

Such models of brain processing have benefits for both neuroscience and artificial systems, helping us to understand the elements of image processing by the brain. Neuroscience in turn informs us that features such as recurrence can be used to improve performance in shallow networks, an important message for artificial intelligence systems more broadly.

“There are clear advantages to the high performing, complex deep networks,” explains DiCarlo, “but it’s possible to rein the network in, using the elegance of the primate brain as a model, and we think this will ultimately lead to other kinds of advantages.”